The Genius of the Sonic the Hedgehog Live-Action Movie's Viral Marketing Campaign: Popularity by Bad Publicity

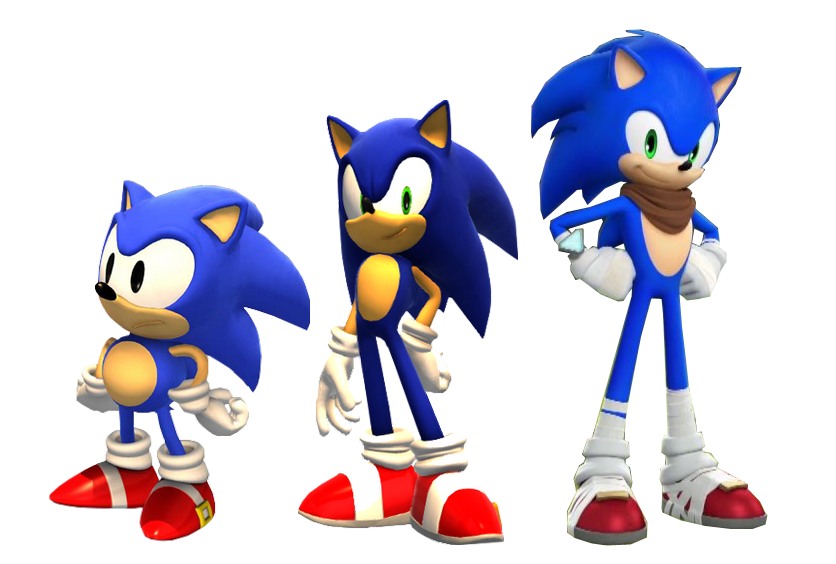

First and foremost: I'm not a big Sonic fan. All I know about Sonic is that there have been "two and a half" incarnations of the character in the games:

- 2D Sonic, as in my all-time Genesis favorites, Sonic the Hedgehog 2 and 3.

- "Classic" 3D Sonic.

- "Rad" 3D Sonic. The one they've been using for the last... 5+ years, I believe.

Compare the three well-known designs:

I'm sure this is not news to you. Neither is the fact that we now have this one, from April's Sonic the Hedgehog movie trailer:

...you can imagine how the internet reacted to this.

Not. Well.

For the past week, the web has been boiling with hate, discussions and alternative design proposals coming from every which way. People obviously care about the "genuine" Sonic the Hedgehog character enough to get enraged over Hollywood butchering the design.

But... did they really intend to release this?

You see, Sonic the Hedgehog may be a popular franchise, but it is nothing compared to Pokémon or Mario, for example. SEGA's mascot was a hit back when SEGA was: in the '90s and mid-2000s. Nowadays not many people (myself included) actually care about new games starring the familiar cast.

Movie-making is a business. You make a movie to cash in. Historically, game-based films have never made much of a splash at the box office, so you need the whole internet to find out about your movie. How can you do that?

By deliberately pissing off the fans and then publicly announcing that you'll change the design!

How can you tell? Simple. Just take a look at Sonic as he was portrayed in the original trailer, which had been viewed over 22 million times but was ultimately removed from YouTube as soon as the new Sonic design was announced. Still, I was able to grab a juicy screenshot:

It's obvious that not much work went into the "bad" Sonic's appearance in the movie: the animation is wonky, and in several shots he looks as if he was lazily "photoshopped" in.

At the same time, one can tell they tried to hit as many bases as they could to make the design contrast with the original as blatantly as possible:

- hands instead of gloves

- "Nike"-looking shoes instead of signature boots

- blue-haired arms instead of flesh/white-colored ones

- small, separate eyes instead of the well-recognized joined ones

- human teeth (oh my...)

- human-like proportions ticking every box in the "uncanny valley" book.

...and so on. You can't make something like this by accident and have it survive through all stages of expensive pre- and post-production!

So, naturally, people started a real shitstorm on the web, and no one really believed that Paramount would listen.

But what's this, Jeff Fowler?

Wow! The Director of the movie replied AND listened to us! He's cool! The movie is now cool! I can't wait to tell my friends about this!

The tweet immediately got some serious traction, as you can see from the screenshot (taken on May 3rd).

This, coupled with the fact that hundreds of thousands of people who never cared about, or even knew of, the movie are now well aware of its existence, makes for one kick-ass viral marketing campaign.

Now, I'm certainly not alone in thinking that everything that happened was a calculated move.

Still, this is one risky but effective and efficient PR move. Paramount Marketing team, I tip my fedora to you. Great job!

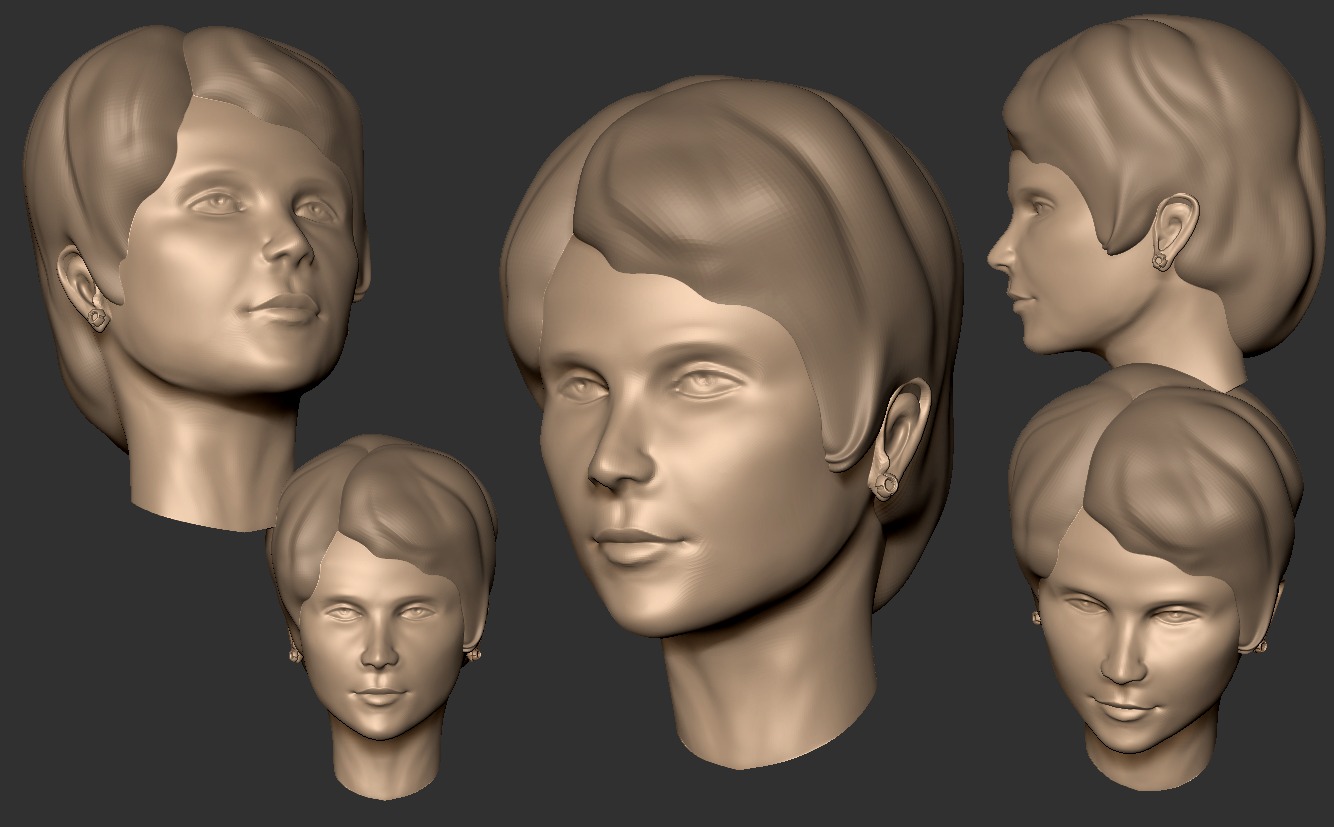

A familiar face

I had so much fun working on this that I don't even care it ended up not looking like me at all =P

Yet another random sculpt

It may not be perfect by any means, but a mere year ago I wouldn't have dared to dream of ending up with something like this, starting from a sphere, without any reference, in just a couple of hours. Feels Good Man.

Deep, Meaningful and Possibly Life-Changing Story-Driven Games Everyone Should Play Regardless of Previous Gaming Experience [No Spoilers]

Today we'll once again discuss one of the most remarkable types of media: video games. The interactive nature of games makes it possible to tell stories in bold and original ways, allowing players to experience narratives at their own pace, or customizing the player's experience with different story routes or even endings based on one's actions throughout the journey.

Some projects can only work as a video game, especially those that rely heavily on player choice and provide replayability by changing some aspects of the game, essentially turning it into an endless experience.

Among the games we play, there's a range of particularly impressive narrative-driven titles that tell fairly complex stories by taking the player through the whole spectrum of emotion. There's no black and white and there are no cliché Hollywood endings, only nuanced, deep, sometimes even dark topics put under scrutiny. They raise philosophical questions about the world we live in, the things that make us who we are, the human condition in general and the meaning of it all. Rather than giving pre-digested answers, they make the player think, sometimes even causing one to lose sleep over the choices made or the events experienced.

I believe these games are the pinnacle of interactive storytelling. Some of them deserve universal praise and simply must be played (no, experienced) by everyone, regardless of age, gender or previous gaming experience. Just as a well-cooked, masterfully spiced meal astounds one with a symphony of flavors, these games deliver some of the most intense experiences one can expect from a multimedia project.

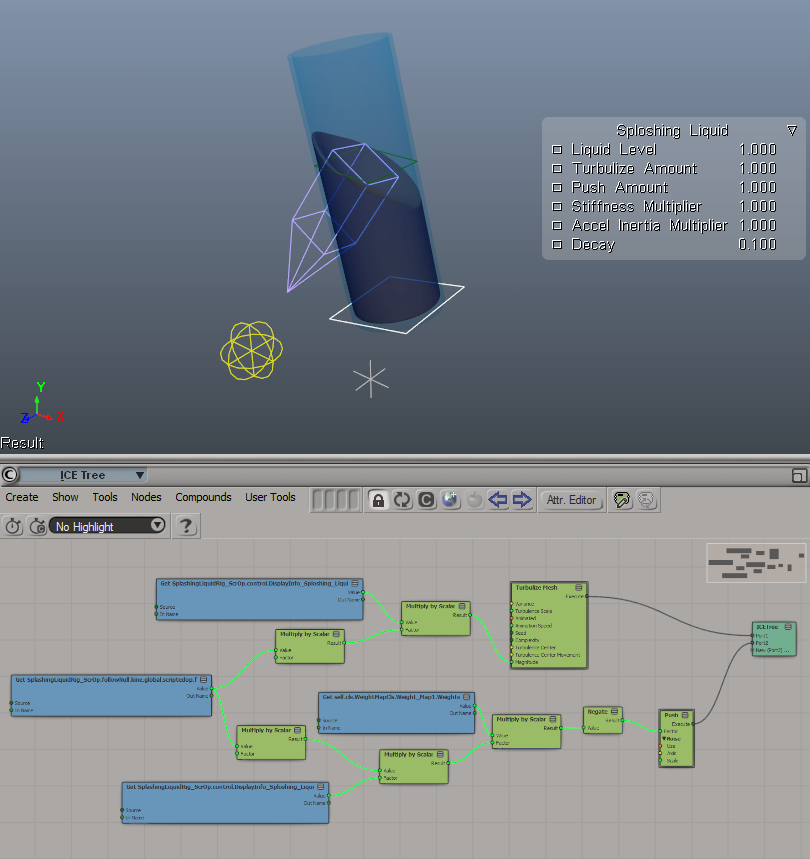

Dynamic Sloshing Liquid Rig. Making It Look Like a Simulation With Nothing but the Awesome Power of Math. Part 1: The Basic Rig

Check this out:

Looks delicious, doesn't it?

What you see here is not the result of a fluid simulation. It's a combination of linear algebra and some neat mesh manipulation tricks that make the surface deform and behave as if it were a small body of water in a container being thrown around a scene, sloshing and splashing back and forth.
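As a rough, hypothetical illustration of the kind of math a rig like this can lean on (an assumption for illustration, not necessarily what this rig does), here is a minimal Python sketch. Liquid at rest inside an accelerating container settles into a flat surface perpendicular to the effective gravity g - a, and a damped spring makes the surface normal lag behind and overshoot that target, which is what reads as "sloshing". The names `target_normal`, `SloshSpring` and `surface_height`, and the stiffness and damping values, are all made up for this example.

```python
import math

# Hypothetical sketch, not the rig's actual implementation.

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def target_normal(accel, gravity=(0.0, 0.0, -9.81)):
    # At equilibrium the free surface is perpendicular to effective gravity (g - a),
    # so its upward-facing normal is normalize(a - g).
    return normalize(tuple(a - g for a, g in zip(accel, gravity)))

class SloshSpring:
    """Damped spring that makes the surface normal lag behind its target (assumed model)."""

    def __init__(self, stiffness=60.0, damping=4.0):
        self.stiffness = stiffness
        self.damping = damping
        self.normal = (0.0, 0.0, 1.0)    # liquid starts flat
        self.velocity = (0.0, 0.0, 0.0)  # rate of change of the normal

    def step(self, accel, dt):
        target = target_normal(accel)
        # Spring pull toward the target minus damping on the current velocity.
        force = tuple(self.stiffness * (t - n) - self.damping * v
                      for t, n, v in zip(target, self.normal, self.velocity))
        # Semi-implicit Euler: update velocity first, then the normal.
        self.velocity = tuple(v + f * dt for v, f in zip(self.velocity, force))
        moved = tuple(n + v * dt for n, v in zip(self.normal, self.velocity))
        self.normal = normalize(moved)  # keep it a unit vector
        return self.normal

def surface_height(normal, x, y):
    # Height of the plane through the container's center (n . p = 0) at (x, y).
    nx, ny, nz = normal
    return -(nx * x + ny * y) / nz

if __name__ == "__main__":
    spring = SloshSpring()
    dt = 1.0 / 24.0
    # Shove the container along +x for half a second, then let it coast.
    for frame in range(48):
        accel = (5.0, 0.0, 0.0) if frame < 12 else (0.0, 0.0, 0.0)
        n = spring.step(accel, dt)
        print(frame, round(surface_height(n, -1.0, 0.0), 3))
```

Displacing each vertex of the liquid's top cap by `surface_height` gives a tilting, overshooting look: the liquid piles up at the rear while the container speeds up and rocks back once it stops. A production rig would also need to keep the surface inside the container walls and add secondary ripples, which this sketch ignores.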

This is what you call a rig: a "sloshing liquid rig," as I decided to name it, intended for a couple of scenes in the animated short film I'm working on.

This bad boy will save me so much time when I get to animating liquids for background objects.

Let's now dive in and see what's happening under the hood. There's some math involved, but be not afraid: as always, I will try to make it as entertaining as possible and visualize everything along the way.

Bring on the drama!

I'm sorry, but I simply could not resist.

It's just... Dramatic...

[Cue pause...]

TOO DRAMATIC!