You never know until you try, right?

Remember how in a previous post I was looking for a sculptor?

Well, scratch that.

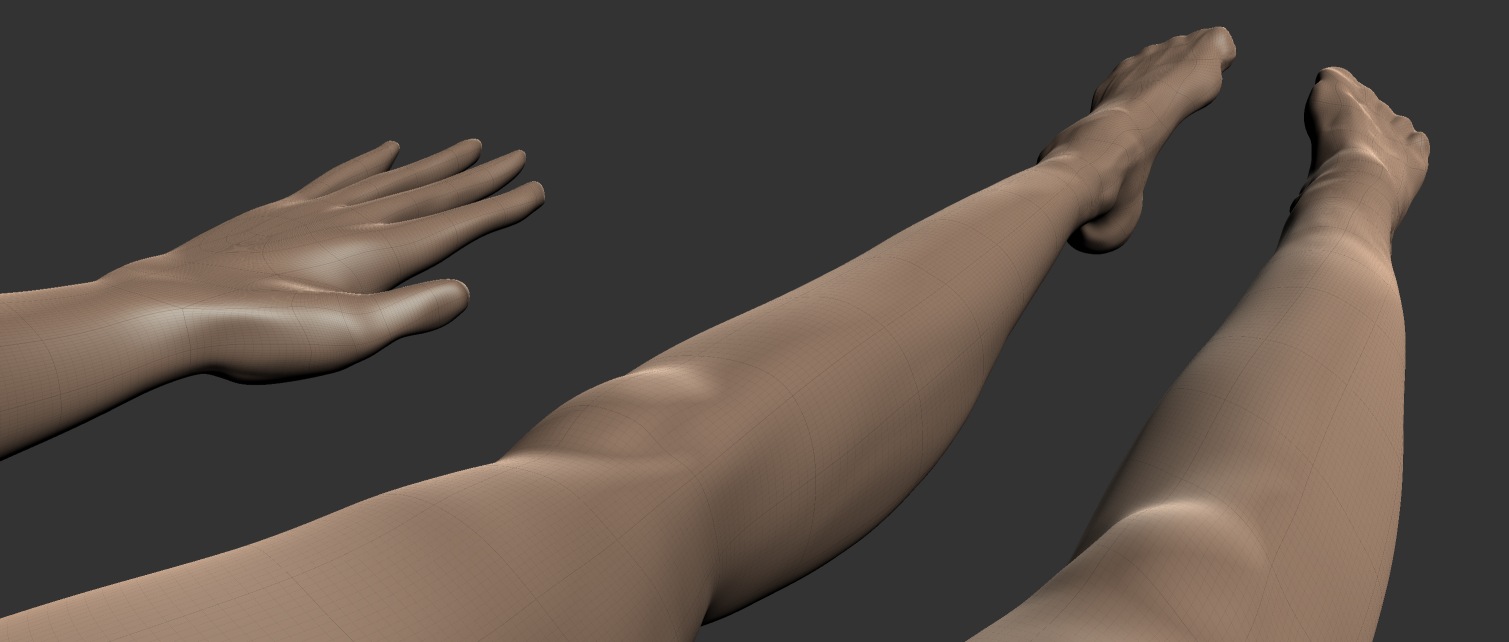

Turns out all I needed to unleash my inner sculptor was a suitable tool, and gawd knows it wasn't ZBrush.

Which one was it, exactly? A-ha! Now that is a great idea for a post, isn't it? ;)

Film production and the upcoming blog post series

As promised, I will do my best to document each and every step of producing the short animated film (for archival purposes, of course, since no one should ever take a weekend scientist's ramblings seriously).

Therefore, I'm starting a series of blog posts under the "Production" category, which I will gradually fill with new articles along the way.

Preliminaries

Film production is not a new experience for me. Over the years I've produced and directed several short films and a couple of music videos with my trusty line of Canon EOS cameras, starting with my very first, an entry-level EOS 550D capable of recording full HD video at 24p.

It has long since been superseded by a series of upgrades; as of today I shoot with an EOS 750D running the Cinestyle Profile and the Magic Lantern firmware hack.

I have some practice working with cameras, including rentals such as RED and Blackmagic, as well as the rest of the gear: all kinds of lenses, some Steadicams, cranes, mics and such.

Long story short, I produced a couple of stories, which at some point led me to a series of videos for a client that called for a massive amount of planar and 3D camera tracking, chroma keying, rig removal and, finally, the integration of CG elements into the footage. It felt like a baptism by fire, and in the end it was what made me fall in love with VFX and, ultimately, with 3D CGI.

Now what does this have to do with the topic of pure CG film production?

How and why it's different

In traditional cinema you write the script, plan your shots, gather actors and crew, scout locations, then shoot your takes and edit and post, and edit and post, until you're done. If you plan ahead well, you usually end up with plenty of footage to edit. This is how I've been making my films and other videos for a long time.

Animated feature production is vastly different from the traditional "in-camera" deal. Even movies with a heavy dose of CGI (Transformers, anyone?) are not quite the same, since there you're still mostly dealing with footage that has already been shot, whereas a CG-only production has no such foundation. Everything has to be created from scratch. Duh!

My first and so far only CG-only "animation" was a trailer for my iOS game Run and Rock-it Kristie.

Here I had to adapt my routine a bit, but even so, 80% of the footage came from three special in-game locations I built specifically for the trailer. So basically the trailer was "shot" with a modified in-game follow-camera rig, which left me plenty of footage to edit afterwards.

In a way, it was quite close to what I'd gotten used to over the years.

The process

As soon as you decide to go full CG, boy oh boy, are you in trouble.

Since I'm not yet experienced in animated CG production, I'll hand the word to DreamWorks and their gang of adorable penguins from Madagascar and let them describe in detail what it takes to produce an animated film.

Wow, that's a lot of production steps...

So, in the long run, you are free to create your own worlds and creatures and to set up stories and shots exactly the way you want them; the possibilities are limitless. The price, obviously, is a much more complicated production process, one that calls for lots of things you often take for granted when shooting with a camera: people, movement, environments and locations, weather effects and many other objects and phenomena that are either already there for you to capture on film or can be created in-camera, on set or in post.

Hell, even when it comes to camera work, if you don't have access to the sweet tech James Cameron and Steven Spielberg used when "shooting" their animated features (Avatar and The Adventures of Tintin: The Secret of the Unicorn, respectively), you'll have to animate the camera in your DCC (digital content creation) application or shoot and track real camera footage for that sweet handheld or Steadicam look.

Scared yet?

So, all things considered I should probably feel overwhelmed and terrified at what lies ahead. Right?

Nope.

It's just a project. And as a project manager I thrive on challenges: I see every complication as a chance to learn new skills, and I follow each and every project through to the end. This one will be no different.

Thank you for reading and stay tuned!

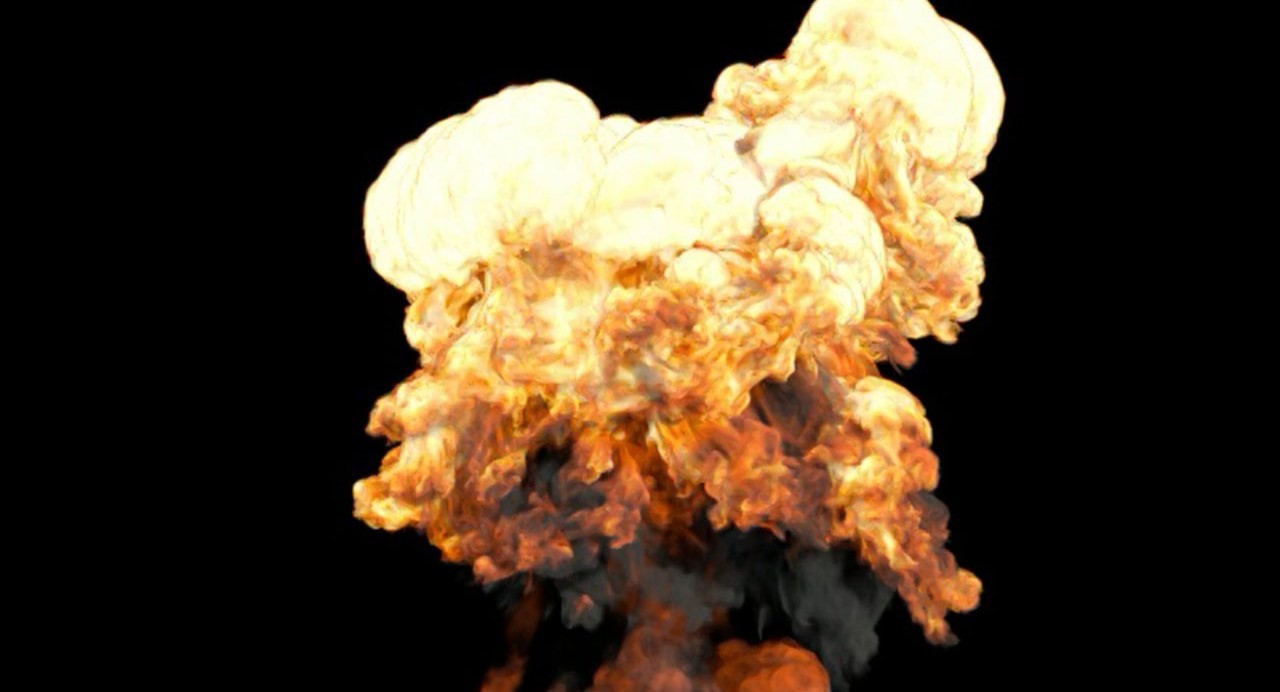

Redshift v2 demo available

After a long period of beta testing, version 2.0.44 of Redshift has finally been made available for everyone to try and play with.

If you don't know what Redshift3D is, it's a biased GPU renderer, unlike the many unbiased GPU path tracers out there. To put it simply, it's like a supercharged V-Ray with all the biased goodness: irradiance caching, photon mapping and precalculated SSS. Together with the new OpenVDB support, all this makes Redshift a blast to work with.

Literally.

Here's what's new in 2.0 vs. 1.3:

All V1.3 features PLUS:

- New “Redshift Material” which more closely follows the PBR paradigms (“metalness”, etc)

- New specular BRDFs (on top of Ashikhmin-Shirley): GGX and Beckmann (Cook-Torrance)

- Nested dielectrics

- Multiple dome lights

- Baking

- Dispersion (part of the Redshift Material)

- OpenVDB Support

- Improved multiple-scattering model (part of the SSS, Skin and Redshift Material)

- Single scattering (part of the Redshift Material)

- Support for alSurface shader

- Linear specular glossiness/roughness response

- Improved photon mapping accuracy for complex materials

- Physically-correct Fresnel for rough reflections

- New SubSurfaceScattering and SubSurfaceScatteringRaw AOV types

- AOV improvements for blended materials

- NVIDIA Pascal GPU (GTX 1070/1080) support

- Automatic memory management

- Better importance sampling of bokeh images

- Texture reference object support (Maya)
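For the curious: GGX and Beckmann differ mainly in the microfacet normal distribution function (NDF), the D(h) term of the Cook-Torrance model. The sketch below is only an illustration of the textbook form of each distribution, not Redshift's code; the function names and the roughness parameter `alpha` are my own hypothetical choices. It evaluates both NDFs and numerically checks that each is normalized, i.e. that D(h)·cos θ integrated over the hemisphere comes out to 1.

```python
import math


def ggx_ndf(cos_theta: float, alpha: float) -> float:
    """GGX (Trowbridge-Reitz) NDF for a microfacet normal at angle theta
    from the surface normal. alpha is a hypothetical roughness parameter."""
    a2 = alpha * alpha
    c2 = cos_theta * cos_theta
    denom = c2 * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


def beckmann_ndf(cos_theta: float, alpha: float) -> float:
    """Beckmann NDF with the same hypothetical roughness parameter alpha."""
    c2 = cos_theta * cos_theta
    tan2 = (1.0 - c2) / c2  # tan^2(theta)
    return math.exp(-tan2 / (alpha * alpha)) / (math.pi * alpha * alpha * c2 * c2)


def projected_integral(ndf, alpha: float, steps: int = 20000) -> float:
    """Integrate D(h) * cos(theta) over the hemisphere with the midpoint rule.
    Both NDFs are isotropic, so the phi integral is just a factor of 2*pi."""
    d_theta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta  # midpoint of each slice, never exactly pi/2
        c = math.cos(theta)
        # solid-angle element is sin(theta) d(theta) d(phi)
        total += ndf(c, alpha) * c * math.sin(theta) * d_theta
    return 2.0 * math.pi * total


if __name__ == "__main__":
    for alpha in (0.1, 0.3, 0.7):
        print(alpha,
              projected_integral(ggx_ndf, alpha),
              projected_integral(beckmann_ndf, alpha))
```

Both integrals should land very close to 1. The practical difference shows up away from the peak: at the same `alpha`, GGX keeps a much longer tail at steep microfacet angles than Beckmann, which is why GGX highlights tend to look softer, with a glowing falloff around the hotspot.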

You can join the discussion on the official Redshift forums.

Another CGI integration experiment

My latest CGI-to-live-action experiment.

Rendered with Redshift 1.3 for Softimage and composited in After Effects. The main goal was polishing my new HDRI map creation pipeline for realistic IBL rendering with a raytracer. The Imrod 3D model was taken from TF3DM.

Blizzard uses Redshift to render Overwatch shorts

Did you know that Blizzard used Redshift3D to render some of its Overwatch animated shorts? "Recall" and "Alive", to be specific.

Things are looking better and better for Redshift. I'm planning to use the renderer myself for a short film I'm currently working on. More on that later.