NVIDIA and its partners, as well as AAA-developers and game engine gurus like Epic Games, keep throwing their impressive demos at us at an accelerating rate.

These feature the recently announced real-time ray tracing tool-set of Microsoft DirectX 12 as well as the (claimed) performance benefits promised by NVIDIA's proprietary RTX technology available in their Volta GPU lineup, which in theory should give developers new tools for achieving never-before-seen realism in games and real-time visual applications.

There's a demo by the Epic team I found particularly impressive:

Looking at these beautiful images one might expect NVIDIA RTX and DirectX DXR to do more than they are actually capable of. Some might even think that the time has come when we can ray trace the whole scene in real-time and say goodbye to good old rasterization.

There's an excellent article at PC Perspective you should definitely check out if you're interested in the current state of the technology and the relationship between Microsoft DirectX Raytracing and NVIDIA RTX. Without any explanation that relationship can be quite confusing: NVIDIA focuses heavily on RTX as native hardware-accelerated tech, whilst Microsoft stresses that DirectX DXR is an extension of the existing DX tool-set and compatible with all future certified DX12-capable graphics cards (since the world of computer graphics doesn't revolve solely around NVIDIA and its products, you know).

So here I am to quickly summarize what RTX and DXR are really capable of at the moment of writing and what they are good (and not so good) for.

The woes of rasterization

As you already know, the current generation of GPUs and consoles relies heavily on rasterization when it comes to rendering. It is a very reliable and efficient way of drawing primitives, but it has several shortcomings when it comes to producing realistic-looking renders. The particular issues of classic rasterization methods which are relevant in our case are:

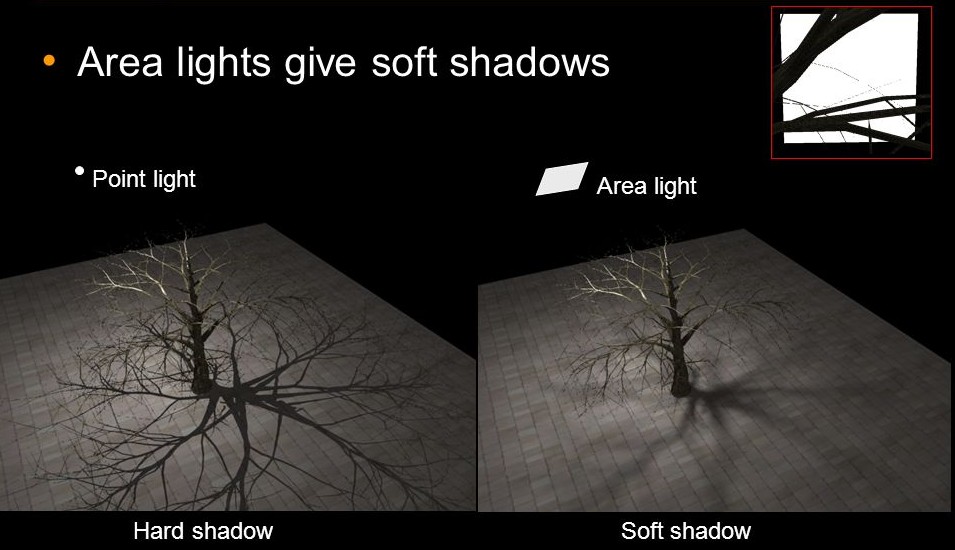

Area-light shadows

Actual shadow-casting light sources are pretty much limited to two types: infinitely distant (parallel) lights like the Sun, or infinitely small point lights. Therefore the shadows produced by the shadow pass are perfectly sharp and need to be smoothed out to simulate soft lighting. Smoothing doesn't usually take the physical dimensions and distance of the light source into consideration, which means no shadow penumbra. Nowadays there are workarounds which try to emulate soft and area lights by smoothing the shadow pass based on the distance of a particular sample to the light source, but there's only so much smoothing you can do before it starts to show artifacts.
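To make the distance-based smoothing idea a bit more concrete, here is a minimal sketch of the penumbra estimate used by one well-known workaround of this kind, percentage-closer soft shadows (PCSS). I'm picking PCSS as an example myself; the function and parameter names are made up for illustration, and a real implementation would also need a blocker search and a filtering step on the shadow map.

```python
def penumbra_width(receiver_distance, blocker_distance, light_size):
    """Estimate penumbra width from similar triangles (the PCSS estimate).

    All distances are measured from the light source. The names are
    hypothetical and exist only for this illustration.
    """
    if blocker_distance <= 0:
        raise ValueError("blocker must be in front of the light")
    if receiver_distance < blocker_distance:
        raise ValueError("receiver cannot be closer to the light than its blocker")
    return (receiver_distance - blocker_distance) * light_size / blocker_distance


# The further the receiver is behind the blocker, the wider the blur kernel.
for receiver in (2.0, 3.0, 4.0):
    print(receiver, penumbra_width(receiver, blocker_distance=2.0, light_size=1.0))
```

The estimate only drives the size of a blur kernel. Nothing is actually traced towards the light, which is why large kernels eventually show artifacts.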

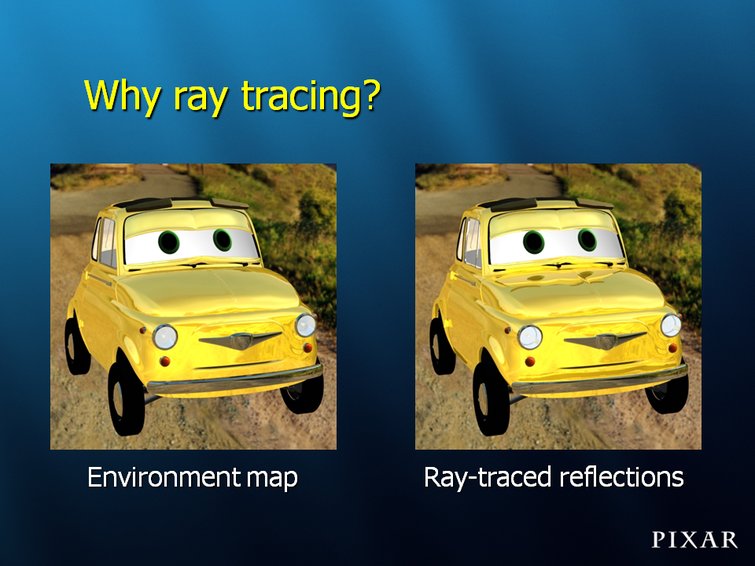

Reflections

Reflections are a real problem. Since we are rasterizing primitives and not tracing the actual light rays, the machine has no idea what a reflection is at all. Workarounds include the use of cube maps (classic) and, more recently, reflection probes. There is also the screen-space reflection (SSR) technique, which I personally can't stand for its utter unreliability, plethora of shortcomings and tendency to completely break immersion by failing randomly and unexpectedly.

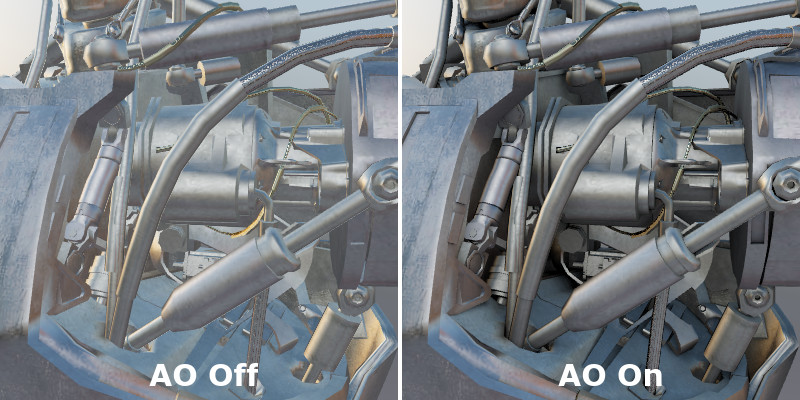

Ambient occlusion

This is a pretty specific lighting case, but as it turns out, it really helps to "ground" objects in the scene and seriously adds to realism. The only workaround is to actually trace the rays. Commonly – in screen-space using the existing Z-Depth pass.

As it stands today, NVIDIA RTX and DirectX DXR are designed to primarily address these issues and not to be used for real-time full-scene ray tracing.

What I mean is that all these promotional videos and press releases can be quite misleading, so it's useful to understand the limitations of the technologies. The limitations and trade-offs of RTX and DXR are what one should be absolutely aware of.

Allow me to elaborate.

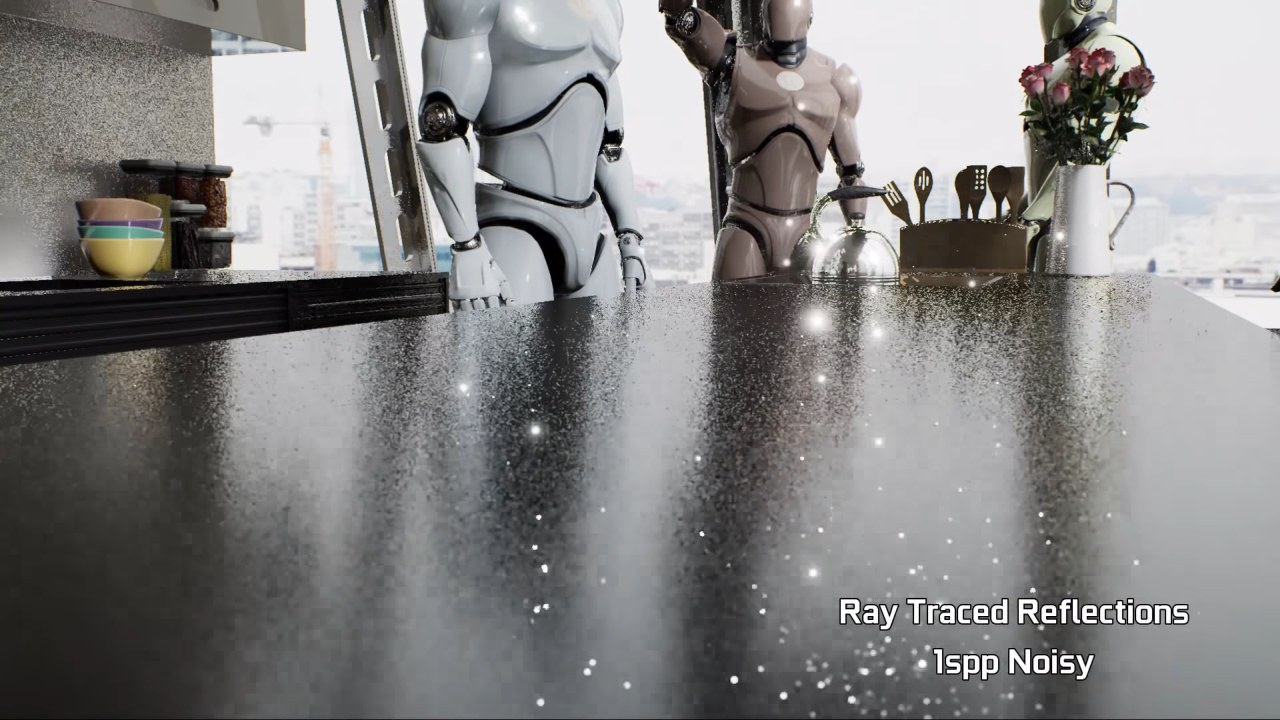

The woes of ray tracing

Ray tracing is expensive. Like really expensive and it gets progressively more expensive the higher the resolution of the resulting render and the number of shadow-casting lights and reflective/refractive surfaces. Even the most powerful graphics hardware will struggle to trace with more than 1 to 3 samples per pixel for a 1080p-frame at a rate of 30 to 60 times per second. There's only so many rays you can throw into the scene every second and you have to manage this budget really well, otherwise you'll end up with a useless noisy mess or a slide-show.
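To put a number on that budget, here is a quick back-of-the-envelope sketch. It only counts one ray per sample and ignores secondary bounces, so treat the figures as a lower bound; the function name is mine.

```python
def rays_per_second(width, height, samples_per_pixel, fps):
    """Rays needed per second, assuming exactly one ray per sample."""
    return width * height * samples_per_pixel * fps


low = rays_per_second(1920, 1080, samples_per_pixel=1, fps=30)
high = rays_per_second(1920, 1080, samples_per_pixel=3, fps=60)
print(f"{low:,} to {high:,} rays per second")
# prints "62,208,000 to 373,248,000 rays per second"
```

Even the modest end of that range is tens of millions of rays every second, before a single reflection bounce is taken into account.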

So what you need to improve the situation is a ray tracer with the "cheapest" rays (that is, one with as little overhead per ray as possible). Luckily, in the case of NVIDIA we have OptiX, "an application framework for achieving optimal ray tracing performance on the GPU. It provides a simple, recursive, and flexible pipeline for accelerating ray tracing algorithms". This framework is tailored to NVIDIA GPUs so that it can perform tracing operations as quickly as the hardware allows.

And yet, it's still not quite enough!

Here's the official RTX demo by NVIDIA:

Did you see the noise in the unfiltered renders? Oh, yeah... That's what you get with 1 sample per pixel.

But is this something to be expected of a real-time RT technology? Absolutely.

The beauty of the proposed solution (and a vital part of it) is the new "AI-Accelerated denoiser" shipped with OptiX, which acts as a sort of "temporal" noise filter. It collects sample data over several frames, averages it and applies smoothing, ultimately producing more or less clean results which are very close to the "ground truth" (that is, the result which would be achieved by actually tracing the scene with lots of samples per pixel).
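The averaging part of this idea is easy to sketch. What follows is emphatically not NVIDIA's denoiser (there is no neural network, no spatial smoothing and no motion handling), just a plain running mean of 1 SPP samples for a single static pixel, under the assumption that each frame's shadow ray either reaches the light or doesn't. All names and values are hypothetical.

```python
import random


def trace_one_sample(true_visibility, rng):
    """Simulate a single 1 SPP shadow ray: 1.0 if it reaches the light, else 0.0."""
    return 1.0 if rng.random() < true_visibility else 0.0


def accumulate(history, sample, frame_index):
    """Fold a new sample into the running mean of all previous frames."""
    return history + (sample - history) / (frame_index + 1)


def blend_frames(true_visibility, frames, seed=0):
    """Average `frames` consecutive 1 SPP samples of one static pixel."""
    rng = random.Random(seed)
    history = 0.0
    for i in range(frames):
        history = accumulate(history, trace_one_sample(true_visibility, rng), i)
    return history


truth = 0.3  # hypothetical penumbra pixel: 30% of the area light is visible
single = blend_frames(truth, frames=1)
blended = blend_frames(truth, frames=256)
print(f"1 frame: {single:.3f}, 256 frames: {blended:.3f}, ground truth: {truth}")
```

A single frame can only ever report 0 or 1 for this pixel, while the blended value drifts towards the ground truth as frames pile up. A real-time denoiser doesn't have 256 frames of a static image to work with, which is where the smoothing and the "AI" part come in.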

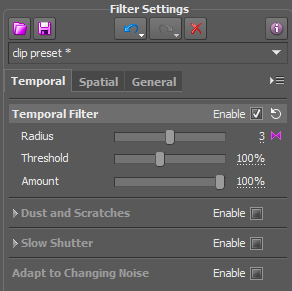

Reminds me of a similar technique implemented in some of the video denoise plug-ins like Neat Video called "Temporal Filter" which produces great results in most cases. So great in fact, that even traditional per-frame "Spatial" filtering becomes redundant: the denoiser collects enough data over several frames to be able to tell what's happening in the video and separate noise from actual detail. Temporal filtering eliminates flickering since data over several frames is averaged and noisy parts filled with the recovered information.

Neat Video Temporal Filter. Radius means the number of frames to analyze before and after the frame being processed


But I digress. Back to OptiX.

This "AI-Accelerated" denoiser is pretty cool. It is even able to detect and subdue the bane of any path tracer, specular fireflies. This is particularly noticeable in the true path tracing mode of OptiX (see closer to the end of the video): the longer you let the image converge (and it doesn't take too many samples for the denoiser to produce a clean image), the cleaner the result and the fewer fireflies and other render defects there are.

But in this true path tracer mode it doesn't seem possible to have a "temporal temporal denoising" of sorts, where you'd take a series of converged and denoised static path traced renders and then blend and denoise those again to eliminate flickering and end up with a smooth and sexy movie. This is a fundamental problem of stochastic path tracers and, as far as I know, the only way to effectively fight it is during the post-processing stage. That, or by throwing more rays into the scene, whichever is more practical in any specific case. Otherwise there's a high probability of rendering out a movie with low-frequency "swimming" noise, a result of the "AI" denoiser treating every frame independently of the others when trying to converge on the image with a limited supply of actual sampled data per pixel.

Sounds like the kind of issues you may come across when doing Irradiance Caching, if I may draw such an analogy.

So as far as I see it, RTX (in the case of real-time ray tracing) is a sophisticated temporal point sample denoiser coupled with sparse (really sparse, like 1 SPP sparse) ray traced data, designed to cut frame convergence time to values acceptable for real-time applications.

I have no idea whether denoising technology of any kind will also be available with DirectX DXR, though. After all, DXR is meant to be compatible with all DX12-certified GPUs and those which are not developed by NVIDIA would not have access to the RTX tech and would need to have access to some sort of a similar temporal denoiser when performing DirectX DXR tasks.

Otherwise these unlucky ones without an NVIDIA GPU would just end up with a useless noisy 1 SPP ray traced render. That's nooo good!

Still, going back to the video, at 4:55 you can clearly see flickering that occurs at the edges of distant objects. This is an inevitable side-effect of rendering with a low SPP: you never know what surface you're going to hit in the scene in the next frame. No matter how many frames you blend the machine won't be able to tell edges from any homogeneous surface. This is actually a very important observation I will address below – the fact that what is being traced is the overall scene result. Which makes sense: with such a low SPP count it's impossible to clearly trace polygon edges.

The compromise: a hybrid rendering pipeline

There is a reason I mentioned object edges in the above passage. Ray tracing object edges to minimize flickering and aliasing takes time. Especially if we're talking about small triangles, like leaves on a tree or blades of grass.

You need more samples at the edges of polygons to avoid aliasing and flickering

Therefore real-time noise-free full-scene ray tracing is out of the question. I mean, hell, we have to make do with 1 SPP for the specular sample, 1 SPP for the shadow sample and 1 SPP for AO each frame! 30 or more times per second. And also analyze those and perform temporal smoothing.

So what do we do?

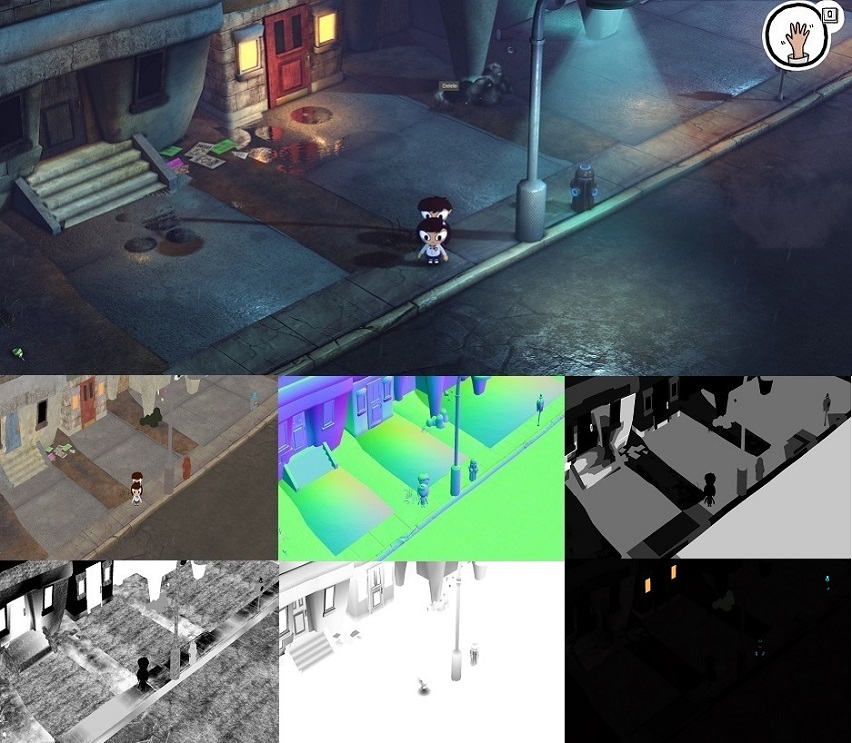

We render and combine passes!

Look, we already do deferred rendering in interactive applications and games. Let's combine the performance of the current OGL/DX rasterization techniques and post-processing methods like temporal anti-aliasing and augment those with a couple of actual ray traced passes, like specular, AO and shadow ones!

This way we'll be getting the best of both worlds: a clean anti-aliased rasterized render with physically-based shaded materials (including a noise-free lit diffuse pass) and ray traced shadows, reflections (including rough ones!) and ambient occlusion.

In this case RTX/DXR are only used to produce passes (render targets) without having to even know about object edges and such. Just ray trace, clean up and give us the pass to place over the already rasterized ones.
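Here is a toy sketch of what "placing passes over the rasterized ones" could look like for grayscale pixels. The compositing formula is my own simplification (shadow and AO both simply attenuate the diffuse pass, reflections are added on top) and is not taken from any engine or from the RTX/DXR documentation; the names are hypothetical.

```python
def composite_pixel(diffuse, shadow, ao, specular):
    """Combine one rasterized diffuse value with ray traced pass values.

    shadow and ao are visibility terms in [0, 1]; specular is added on top.
    This formula is a deliberately simplified assumption for illustration.
    """
    value = diffuse * shadow * ao + specular
    return min(max(value, 0.0), 1.0)  # clamp to the displayable range


def composite_passes(diffuse, shadow, ao, specular):
    """Apply composite_pixel across whole passes stored as flat lists."""
    return [
        composite_pixel(d, s, a, r)
        for d, s, a, r in zip(diffuse, shadow, ao, specular)
    ]


# Two pixels: one fully lit with no reflection, one fully shadowed but reflective.
frame = composite_passes(
    diffuse=[0.8, 1.0],
    shadow=[1.0, 0.0],
    ao=[1.0, 1.0],
    specular=[0.0, 0.25],
)
print(frame)
```

The ray traced passes here are just images of per-pixel values. The compositing step never needs to know about triangles or edges, since those come from the rasterized pass.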

Which is, basically, how they are supposed to be used by design.

Still limited, but does its job well

Since the release of this new technology many people have kept pestering developers, asking whether RTX and/or DXR could be integrated into their favorite offline GPU renderer. I was one of them and I'm ashamed to have jumped on the hype train.

Long story short, RTX/DXR are what they are: fast ray tracing techniques full of compromises, cheats and limitations aimed at making practical real-time ray tracing possible at all.

Panos Zompolas, one of the developers of the Redshift renderer, had this to say to answer most of the questions in one go. Here's a short version:

One question we’ve been getting asked a lot is “will this tech make it into Redshift”? The answer to any such tech questions is: of course we’ll consider it! And, in fact, we often do before we even get asked the question!

The first misconception we see people fall victim to is that these demos are fully ray traced. I’m afraid this is not the case! These demos use ray tracing for specific effects. Namely reflections, area lighting and, in some cases, AO. To our knowledge, none of these demos ray traces (“path traces”) GI or does any elaborate multi-bounce tracing.

The second misconception has to do with what hardware these demos are running on. Yes, this is Volta (a commercial GPU), but in quite a few of these cases it’s either with multiple of them or with extreme hardware solutions like the DGX-1 (which costs $150000).

The third misconception is that, if this technology was to be used in a production renderer like Redshift, you’d get the same realtime performance and features. Or that, “ok it might not be realtime, but surely it will be faster than today”. Well… this one has a slightly longer answer…

The main reason why a production renderer (GPU or not) cannot produce “true” realtime results at 30 or 60fps isn’t because you don’t have multiple Voltas. The reason is its complicated rendering code - which exists because of user expectations. It simply does too much work. To explain: when a videogame wants to ray trace, it has a relatively simple shader to generate the rays (reflection, AO, shadow) and relatively simple shaders to execute when these rays hit something in the scene. And such a renderer typically shoots very few rays (a handful) and then denoises. On the other hand, when a renderer like Redshift does these very same operations, it has to consider many things that are not (today) necessary for a videogame engine. Examples include: importance-sampling, multiple (expensive) BRDFs, nested dielectrics, prep work for volume ray marching, ray marching, motion blur, elaborate data structures for storing vertex formats and user data, mesh-light links, AOV housekeeping, deep rendering, mattes, trace sets, point based techniques.

A videogame doesn’t need most of those things today. Will it need them a few years from now? Sure, maybe. Could it implement all (or most) of that today? Yeah it could. But, if it did, it’d be called Redshift!

Shocking, right? A software solution aimed at providing real-time performance at any cost not being feature-rich enough for a production renderer?

Of course not.

Hence RTX/DXR do what existing GPU ray tracers have been doing for the last 10+ years, just slightly quicker (which actually needs proof) and in the form of a ready to use solution (to be) available in most game engines.

We've had specular, AO and shadow AOVs in our offline GPU renderers since the first day they were released and they are already damn good at those!

Need proof? No problem, just render any scene with 1 (one) sample per pixel in your favorite renderer and marvel at the amazing render speed! Now process a series of such renders with a temporal denoiser and you've got your RTX/DXR.

So discussing the use of RTX/DXR in offline renderers is quite pointless. Instead, think about results you can get in your interactive visual apps and games now that you finally have access to ray traced shadows, reflections and AO.

But what about refractions?

In all of the demos currently available I've noticed the lack of transparent and refractive materials. Transparency has long been an issue in deferred rasterized rendering and ray tracing could be the prime candidate for solving this issue.

What is even more peculiar is that NVIDIA actually teased us with real-time ray tracing demos back in 2012 when they demonstrated ray traced refractive materials:

I wonder why there are still no demos available featuring NVIDIA RTX and/or DX DXR and refractive materials. Sure, refraction is expensive and there's nothing you can do but to actually trace the rays. I don't know whether it's because the trace depth required might make it too difficult to reliably use in real-time applications. I wonder if this will change...

Bottom line

NVIDIA RTX and DirectX Raytracing (DXR) represent the first step towards standardized real-time ray tracing in interactive applications, games and maybe even films.

The most important thing to understand about these technologies is that they were developed exclusively and specifically for real-time visuals and as they stand today, mostly exist to augment already widespread rasterization techniques by more or less solving long-standing issues, such as sharp reflections, area-light shadows and realistic ambient occlusion.

For me, the most important achievement is ray traced reflections, which even support materials with various levels of glossiness. Realistic reflections seriously contribute towards realism. And while AO and soft shadows could to a certain degree be successfully approximated with various techniques prior to the announcement of RTX and DXR, reflections were a real pain in the ass.

Luckily, the time has come to bid cube-mapped, reflection-probed and (especially) screen-space reflections goodbye.

How awesome is that?