(Revised and updated as of June 2020)

Preamble

Before reading any further, please find the time to watch these. I promise, you won't regret it:

Now let's analyze what we just saw and make some important decisions. Let's begin with how all of this could be achieved with a "traditional" 3D CG approach, and why it might not be the best path to follow in 2020 and beyond.

Linear pipeline and the One Man Crew problem

I touched upon this topic in one of my previous posts.

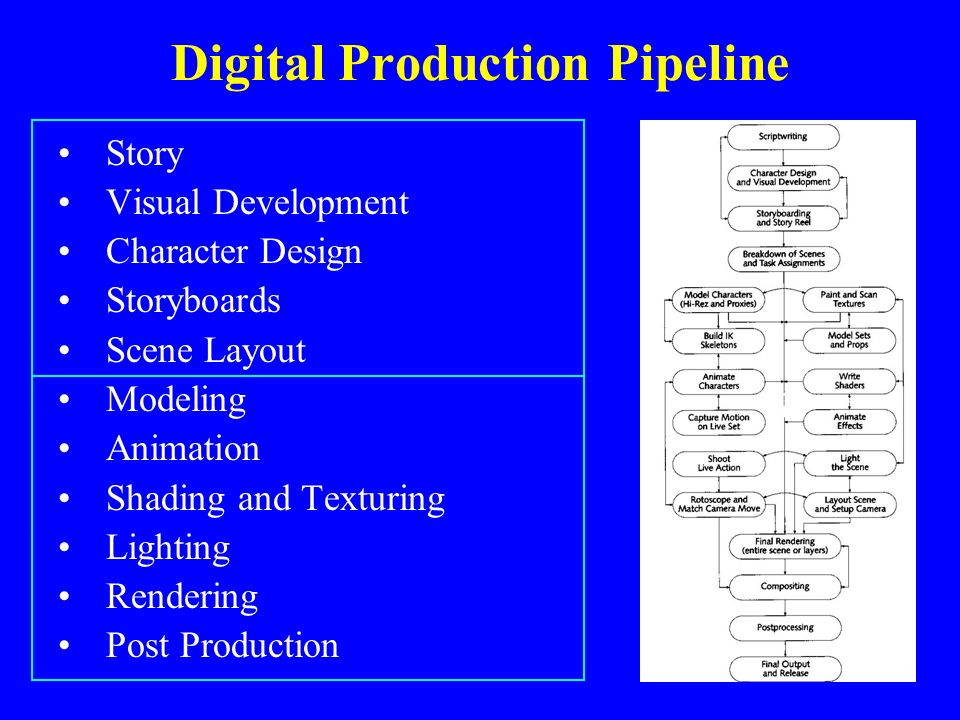

The "traditional" 3D CG-animated movie production pipeline is quite complicated. Even leaving pre-production and the animation, modeling and shading stages aside, an A-grade animated film treats every camera angle as a separate "shot", and shots differ a lot in their requirements. Most of the time, character and environment maps and even rigs have to be tailored specifically for each one of them.

Shot-to-shot character shading differences in the same scene in The Adventures of Tintin (2011)

For example, if a shot features a close-up of a character's face, there is no need to subdivide the character's body every frame and feed it to the renderer. But it also means the facial rig needs more controls, and the face probably requires an additional triangle or two, a couple of extra animation controls/blendshapes, and displacement/normal maps for wrinkles and such.

But the worst thing is that the traditional pipeline is inherently linear.

As a result, you only get to see pretty, production-quality images very late in the process. This is especially true if you rely on a path-tracing renderer and lack the computing power to churn out hundreds of frames at a time. I mean, we are talking about an animated film that runs at 24 frames per second. For a short 8-plus-minute film, this translates into over 12 thousand still frames. And those aren't your straight-out-of-the-renderer beauty pictures: each final frame is a composite of several separate render passes, with special effects and other elements sprinkled on top.
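The arithmetic behind these numbers is worth spelling out. Here's a tiny Python sketch; the 8.5-minute runtime, the five passes and the ten minutes per pass are hypothetical values picked for illustration, not measured numbers:

```python
# Hypothetical numbers for illustration only.
FPS = 24

def frame_count(minutes: float, fps: int = FPS) -> int:
    """Number of still frames in a film of the given runtime."""
    return round(minutes * 60 * fps)

def render_hours(frames: int, passes: int, minutes_per_pass: float) -> float:
    """Total render time if every pass of every frame takes the same time."""
    return frames * passes * minutes_per_pass / 60

frames = frame_count(8.5)  # an "8-plus-minute" short
print(frames)  # 12240
print(render_hours(frames, passes=5, minutes_per_pass=10))  # 10200.0
```

Even with those made-up but not unreasonable numbers, a single full re-render would keep one machine busy for well over a year (10,200 hours is 425 days).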

Now imagine that at a later stage of the production you or the director decides to make adjustments. Well, shit. All of those comps you rendered out and polished in AE or Nuke? Worthless. Update your scenes, re-bake your simulations and render, render, render those passes all over again. Then comp. Then post.

Sounds fun, no?

You can imagine how much time it would take one illiterate amateur to plan and carry out all of the shots in such a manner. It would be just silly to attempt such a feat.

Therefore, the bar of what I consider acceptable given the resources available at my disposal keeps getting...

Lower.

There! I finally said it! It's called a reality check, okay? It's a good thing. Admitting you have a problem is the first step towards a solution, right?

Right!?..

Oops, wrong picture

All is not lost, and it's certainly not the time to give up.

Am I still going to make use of Blend Shapes to improve facial animation? Absolutely, since animation is the most important aspect of any animated film.

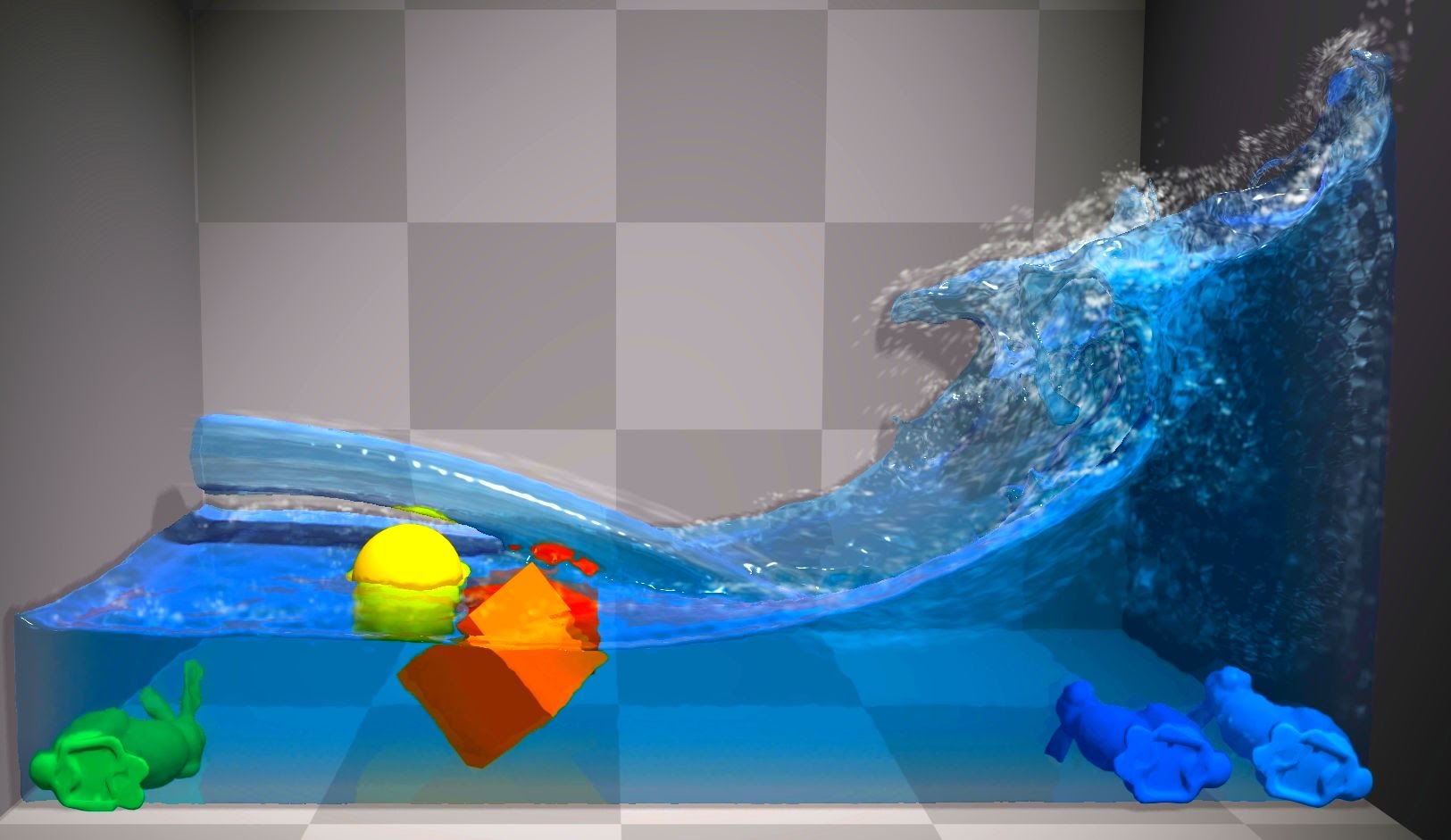

But am I going to do realistic fluid simulation for large bodies of water (the ocean and the ocean shore in my case)? No. Not any more. I'll settle for procedural Tessendorf waves, like in this R&D test I did some time ago:

Will I go over the top with cloth simulation for characters in all scenes? Nope. It's surprising how often you can get away with skinned or bone-rigged clothes instead of actually simulating them, or even use real-time solvers on mid-poly meshes without caching the results at all... But now I'm getting a bit ahead of myself...
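Going back to the procedural Tessendorf waves mentioned above: the core of the method (from Jerry Tessendorf's "Simulating Ocean Water" notes) is a statistical wave spectrum animated with the deep-water dispersion relation ω = √(g·k). Below is a minimal pure-Python sketch assuming the Phillips spectrum. It sums the wave modes directly for readability, whereas real implementations evaluate the whole grid at once with an inverse FFT. All function names and parameter values here are hypothetical:

```python
import cmath
import math
import random

G = 9.81  # gravitational acceleration, m/s^2

def phillips(kx, ky, amplitude, wind_speed, wind_dir):
    """Phillips spectrum P(k) for wave vector (kx, ky); wind_dir must be a unit vector."""
    k2 = kx * kx + ky * ky
    if k2 == 0.0:
        return 0.0  # no energy in the constant (k = 0) term
    largest = wind_speed ** 2 / G  # largest wave a wind of this speed can build
    k_dot_w = (kx * wind_dir[0] + ky * wind_dir[1]) / math.sqrt(k2)
    return amplitude * math.exp(-1.0 / (k2 * largest ** 2)) / (k2 * k2) * k_dot_w ** 2

def wave_vector(i, j, patch):
    """Wave vector of grid mode (i, j) for a square ocean patch of the given size."""
    return 2.0 * math.pi * i / patch, 2.0 * math.pi * j / patch

def initial_amplitudes(modes, patch, amplitude, wind_speed, wind_dir, seed=0):
    """h0(k) = (xi_r + i*xi_i) * sqrt(P(k) / 2) with Gaussian xi, for |i|, |j| <= modes."""
    rng = random.Random(seed)
    h0 = {}
    for i in range(-modes, modes + 1):
        for j in range(-modes, modes + 1):
            p = phillips(*wave_vector(i, j, patch), amplitude, wind_speed, wind_dir)
            xi = complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
            h0[(i, j)] = xi * math.sqrt(p / 2.0)
    return h0

def height_sum(h0, patch, x, y, t):
    """Direct (non-FFT) sum of all wave modes at point (x, y) and time t."""
    total = 0j
    for (i, j), amp in h0.items():
        kx, ky = wave_vector(i, j, patch)
        omega = math.sqrt(G * math.hypot(kx, ky))  # deep-water dispersion relation
        # h(k, t) = h0(k) e^{i w t} + conj(h0(-k)) e^{-i w t}
        hk = amp * cmath.exp(1j * omega * t) + h0[(-i, -j)].conjugate() * cmath.exp(-1j * omega * t)
        total += hk * cmath.exp(1j * (kx * x + ky * y))
    return total

def height(h0, patch, x, y, t):
    """Real surface height; modes k and -k are complex conjugates, so imaginary parts cancel."""
    return height_sum(h0, patch, x, y, t).real

h0 = initial_amplitudes(modes=4, patch=64.0, amplitude=1e-4, wind_speed=10.0, wind_dir=(1.0, 0.0))
print(height(h0, 64.0, x=3.0, y=5.0, t=1.5))
```

The symmetric grid of wave vectors is what keeps the surface real-valued: every mode has a partner at -k. The direct sum costs one pass over all modes per sample point, which is exactly why production code switches to an FFT.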

Luckily, there is a way to introduce the "fun" factor back into the process!

And the contemporary off-the-shelf game engines may provide a solution.

Extreme bloom and puke-brown make everything better (not really)

There was a time (mainly the DirectX 7-9 era, up to about 2011-2013) when GPU hardware, software and consoles were constantly getting better but were not quite "there yet". Materials were... okay, but not on par with ray-traced ones; lighting was mostly either pre-baked or simplistic; and image post-processing was in its infancy, so processed frames often got that "blurry" look many consider the worst thing that happened to games in that era.

Remember The Elder Scrolls: Oblivion?

This gamma-lit oversaturated bloom is forever burned into my retina after spending hours playing the game...

Or Deus Ex: Human Yellow Revolution?

I think people at Eidos have issues with color calibration on their monitors

Or Grand Theft Auto IV?

GTA 4 was also one of the infamous "brown"-tinted games

There were actually jokes and comic strips circulating that were dedicated to these early gamma-space post-processing experiments:

Comic by VG Cats

"Are you sure these are real-time?.."

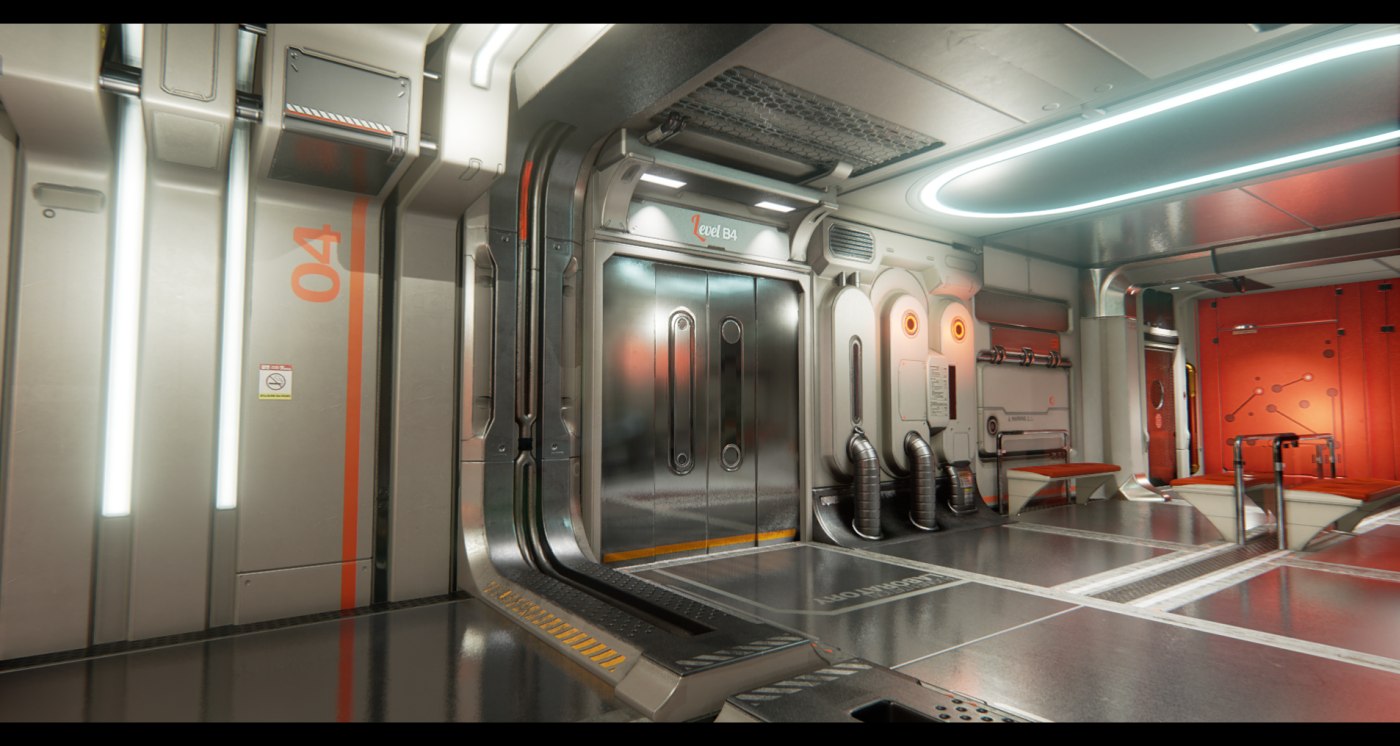

Then the new generation of consoles happened. And, boy, did they deliver. Just look at these:

Ryse: Son of Rome:

Ryse: Son of Rome looks absolutely stunning

Ratchet & Clank (PS4):

Ratchet & Clank looks like an A-grade animated film all the way from the very beginning to the end

Alien: Isolation:

Alien: Isolation is my favorite horror title of all time and it looks amazing

Horizon Zero Dawn:

Horizon Zero Dawn is a true masterpiece when it comes to looks and color grading

This massive leap in quality was made possible partly by noticeably more powerful hardware, but mostly by game studios and engine developers gaining lots of experience with real-time 3D CG and making use of the latest developments in OpenGL, DirectX and console GPU rendering pipelines. Ultimately the whole industry "matured" enough for most of these milestones to become available in the most popular off-the-shelf game engines like Unity, Unreal Engine 4 and CryENGINE 3.

The more time I spend taking apart and studying those amazing real-time demos from the Unity, Crytek and Epic teams, the more I want to "jump ship". That is, instead of doing everything in a "traditional" 3D Digital Content Creation package like Blender, Softimage or Cinema 4D and then rendering the pretty images on the GPU with a production renderer like Redshift, it seems many scenes of the movie I'm working on could instead be rendered directly on the GPU in OpenGL or DX11/12 modes, more or less in real time.

Show me whatcha got

Look what we (when I say "we", I mean unskilled and naive 3D CG amateurs) have at our disposal when it comes to real-time engines nowadays:

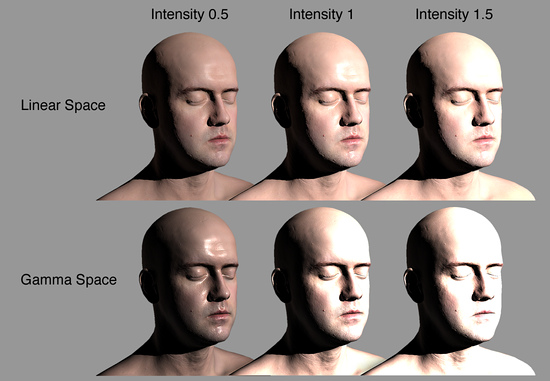

Linear, not Gamma-lit rendering

Looking at the last four screenshots, you may have noticed the massive jump in lighting and shading quality in AAA titles released after 2013 or so. The industry finally let gamma-space lighting go and embraced linear lighting for high-budget titles.

If you are not familiar with the term, there's an excellent article by Filmic Worlds you should absolutely check out.

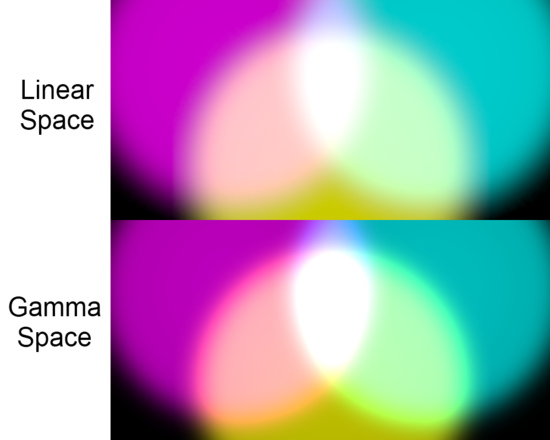

See these glowing edges? Mixing colors in gamma space is a terrible idea.

Linear lighting makes working with materials and lights straightforward and the results predictable. It is also what makes proper HDR processing possible in the first place.
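To see why mixing in gamma space goes wrong, here's a small Python sketch using the standard sRGB transfer functions (IEC 61966-2-1). It blends a black and a white pixel 50/50, once directly on the gamma-encoded values and once in linear light; the function names are just illustrative:

```python
def srgb_to_linear(c: float) -> float:
    """Decode an sRGB-encoded channel value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(c: float) -> float:
    """Encode a linear channel value in [0, 1] back to sRGB."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055

def blend_gamma(a: float, b: float, t: float) -> float:
    """Naive blend on the encoded values (what gamma-space pipelines did)."""
    return a + (b - a) * t

def blend_linear(a: float, b: float, t: float) -> float:
    """Decode, blend the actual light intensities, re-encode for display."""
    la, lb = srgb_to_linear(a), srgb_to_linear(b)
    return linear_to_srgb(la + (lb - la) * t)

print(blend_gamma(0.0, 1.0, 0.5))             # 0.5
print(round(blend_linear(0.0, 1.0, 0.5), 3))  # 0.735
```

The naive blend outputs an encoded 0.5, which the display turns into only about 21% of full brightness, while the linear blend encodes roughly 0.735, a true 50% of the light. That kind of mismatch is what produces the fringing artifacts typical of gamma-space pipelines.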

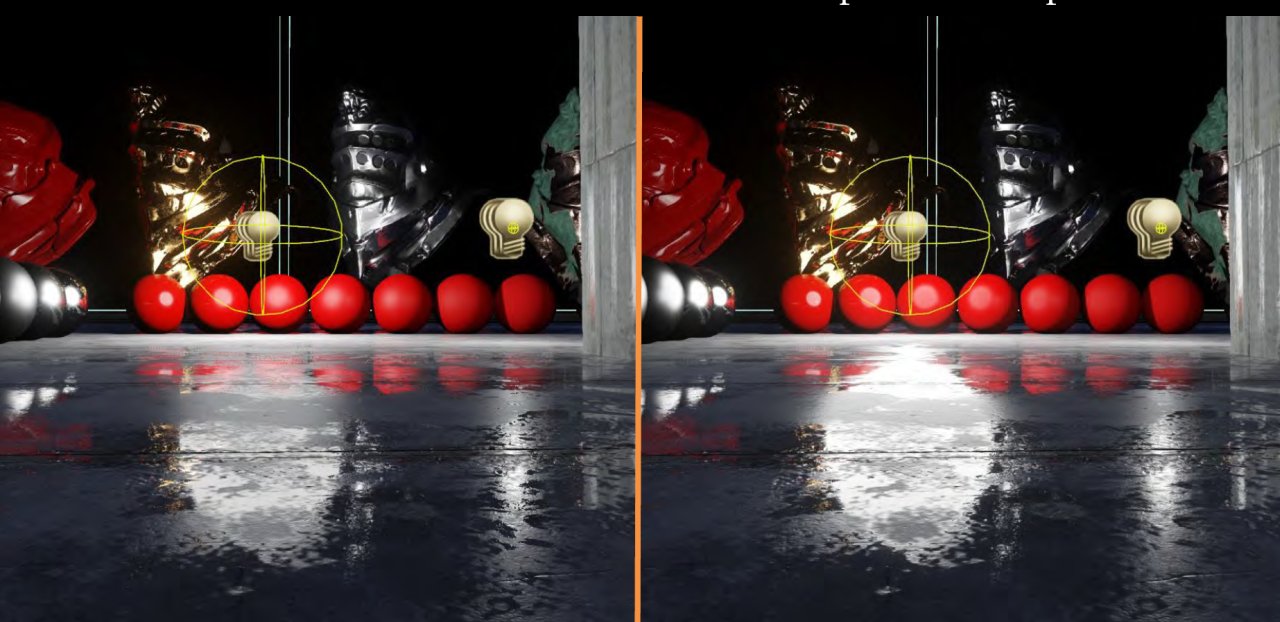

Real-time PBR shading model

It wasn't long before we ended up with an excellent real-time approximation of Disney's original physically based shading model.

Reference (left) vs real-time PBR approximation (right) comparison from the UE4 SIGGRAPH 2013 presentation

Thanks to PBR, given enough GPU memory you can achieve shading results that look almost the same as with real ray tracing, including surface properties such as surface and reflection roughness, HDR specular highlights, anisotropy and metalness.
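For the curious, here's roughly what the specular part of such a model evaluates per light. This is a minimal Python sketch of a Cook-Torrance microfacet BRDF with a GGX distribution, a Schlick-GGX geometry term and Schlick's Fresnel approximation, the same family of terms described in Epic's SIGGRAPH 2013 notes. The function names, the single-channel F0 (0.04 is the usual dielectric default) and the roughness remapping are illustrative choices, not a copy of any engine's shader code:

```python
import math

def ggx_distribution(n_dot_h: float, roughness: float) -> float:
    """GGX / Trowbridge-Reitz normal distribution, with alpha = roughness^2."""
    a2 = (roughness * roughness) ** 2
    d = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * d * d)

def schlick_ggx(n_dot_x: float, k: float) -> float:
    """One-directional Schlick-GGX shadowing/masking factor."""
    return n_dot_x / (n_dot_x * (1.0 - k) + k)

def smith_geometry(n_dot_l: float, n_dot_v: float, roughness: float) -> float:
    """Smith-style product of light and view terms, k = (roughness + 1)^2 / 8."""
    k = (roughness + 1.0) ** 2 / 8.0
    return schlick_ggx(n_dot_l, k) * schlick_ggx(n_dot_v, k)

def fresnel_schlick(v_dot_h: float, f0: float) -> float:
    """Reflectance rises from f0 at normal incidence to 1 at grazing angles."""
    return f0 + (1.0 - f0) * (1.0 - v_dot_h) ** 5

def specular_brdf(n_dot_l, n_dot_v, n_dot_h, v_dot_h, roughness, f0=0.04):
    """Cook-Torrance specular: D * G * F / (4 * (n.l) * (n.v)); dot products must be > 0."""
    d = ggx_distribution(n_dot_h, roughness)
    g = smith_geometry(n_dot_l, n_dot_v, roughness)
    f = fresnel_schlick(v_dot_h, f0)
    return d * g * f / (4.0 * n_dot_l * n_dot_v)

print(specular_brdf(0.8, 0.7, 0.95, 0.9, roughness=0.4))
```

Because the same few parameters (roughness, F0/metalness) drive every term, a material that is set up correctly keeps behaving plausibly as the lighting changes.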

Order-dependent transparency and refraction can still be problematic. Note also that refraction effects can generally only refract the objects and environment behind the surface being shaded, not the object itself:

Displacement, albeit not very common, is possible with real-time tessellation, but it's usually more efficient to use parallax mapping, especially since the latest implementations can write to the depth buffer as if the geometry were actually displaced, and even support self-shadowing:

Translucency effects can be efficiently faked most of the time:

Overall, current implementations of the physically based shading model allow for a variety of realistic materials and, most importantly, properly set-up materials will generally look "correct" under most lighting conditions.
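As for the parallax mapping mentioned above, the basic idea is to shift texture coordinates along the view direction according to a height (depth) map, so the surface appears displaced without adding geometry. Here's a minimal Python sketch of steep parallax mapping, a linear search along the view ray in tangent space. The depth-map callback, scale and layer count are hypothetical, and production implementations add refinement, depth-buffer writes and self-shadowing on top of this:

```python
def steep_parallax(uv, view_ts, depth_at, scale=0.05, layers=32):
    """Return offset UVs where the view ray first dips below the depth map.

    uv       -- (u, v) texture coordinate of the shaded point
    view_ts  -- normalized tangent-space direction from the surface to the eye (z > 0)
    depth_at -- function (u, v) -> depth in [0, 1], where 0 is the top surface
    """
    vx, vy, vz = view_ts
    # UV shift per layer; the full shift at depth 1 is (v.xy / v.z) * scale
    du = -vx / vz * scale / layers
    dv = -vy / vz * scale / layers
    layer_step = 1.0 / layers
    u, v = uv
    ray_depth = 0.0
    # March down one layer at a time until the ray is below the stored depth
    while ray_depth < depth_at(u, v) and ray_depth < 1.0:
        u, v = u + du, v + dv
        ray_depth += layer_step
    return u, v

# A uniformly recessed surface seen at an angle: the lookup slides towards the viewer's side.
print(steep_parallax((0.5, 0.5), (0.6, 0.0, 0.8), lambda u, v: 0.5))
```

More layers make the search more accurate at grazing angles at the cost of more texture fetches, which is the usual quality/performance knob in such effects.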

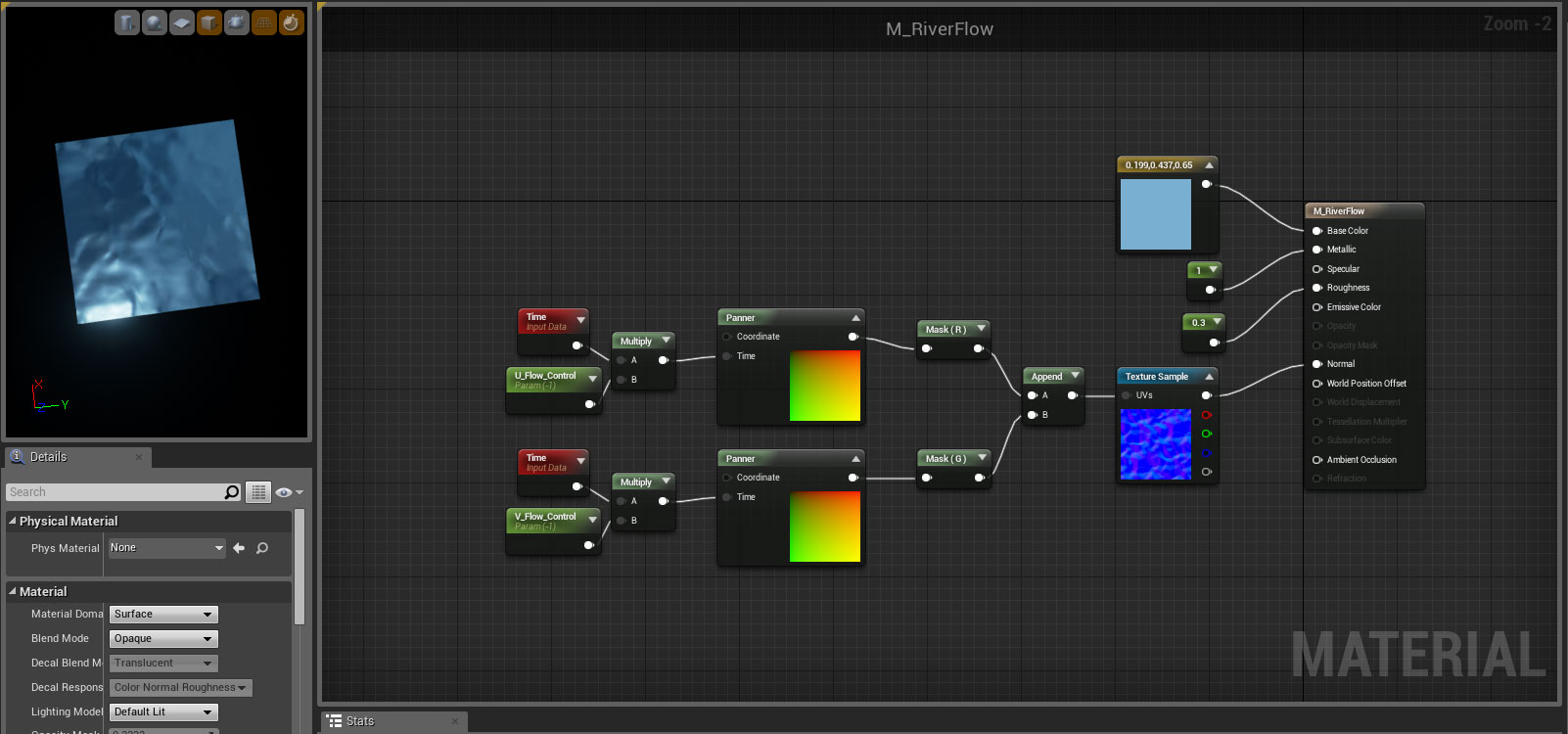

Material (shader) editor

Good old node-based material editor

It's hard to imagine a material authoring pipeline without a node-based editor like the ones most of us have gotten used to over the years in our DCCs. Unreal Engine provides one out of the box. Unity long didn't, but it now ships Shader Graph for its Scriptable Render Pipelines, and there is also Shader Forge, an asset well known among the Unity crowd, which is basically an incredibly powerful material/shader editor. So no problems in this area as far as I can tell.

Global Illumination (both real-time and precomputed)

Several game engines support precomputed static global illumination, real-time GI, or both. "Real" per-pixel GI is very expensive to compute even on the fastest GPUs, so simplifications are necessary. Of all real-time GI methods, I find voxel-based ones the most straightforward to implement in interactive applications. Not games, though. Not yet, at least.

At one point in the past (2014-ish) UE4 was going to have a killer feature in the form of voxel-based dynamic GI, but it ultimately got scrapped. Probably because UE4 is known for rendering huge worlds, and voxel-based... anything doesn't play nice with large-scale scenes at all. I swear there was an official Epic demo of this, but I can't find it anywhere. As of May 2020, UE4 does actually have a dynamic GI solution, but it uses a ray-tracing approach you can read about a bit further below.

Still, third-party solutions exist: one of them is SEGI for Unity, and another is NVIDIA VXGI for Unreal Engine, which is built around features of recent NVIDIA GPU generations (so it's not strictly limited to UE).

GI makes any scene look better. Period.

Voxel cone-traced GI is very taxing performance-wise, but it does give you smooth real-time GI, and some implementations can even calculate "infinite" bounces. There are inherent issues like light leaking, but since we are going to render animated films and not games where the player can go anywhere, these problems can be solved on a per-scene or per-shot basis.

Needless to say, GI is absolutely essential for any type of realistic lighting, and it's nice to know that it's making its way into real-time apps and games.

Support for working with lots and lots of geo

Modern game engines can process millions of triangles and parallelize that work efficiently, which allows for smoother, denser models, including skinned and dynamic ones like characters and soft bodies. In many cases real displacement can either be baked directly into the models or applied dynamically with tessellation. DirectX also supports geometry instancing, so unless you're limited by fill rate or complex shading trees, you can fill your scenes with lots and lots of dense meshes.

Real-time fluid, cloth and physics simulation

Well, duh. These are game engines after all! Simulation brings life into games and should also work for cinematics.

The physics libraries that ship with Unreal Engine nowadays are also good, as far as I can tell. There are impressive physics assets available for Unity (CaronteFX) as well as for both Unity and Unreal (FleX), which can do all kinds of simulations: rigid, soft-body, cloth, fluid and particles. CaronteFX is especially interesting since it is positioned as a real production-quality multiphysics solver developed by Next Limit. You know... the guys behind RealFlow. It's not real-time, strictly speaking, but the idea is to use the interactivity of the Unity game engine to drive simulations and then cache them for playback with little to no overhead.

Since I don't have much experience with dynamics in game engines, I'll have to study this aspect more closely and will not delve into this topic for now.

Real-Time Ray Tracing

In April 2019 the developers of Unreal Engine 4 finally added real-time ray tracing support to the engine. You can read more about that in the following blog post at NVIDIA. Thanks to GPUs getting much more powerful over the years, gaining dedicated hardware blocks for ray tracing calculations, and clever temporal filtering tricks, you can now actually use ray tracing in real time and enhance the realism of your scenes with pretty much a couple of clicks in UE4: sharp or area shadows, realistic mirror reflections and even some sweet global illumination. By combining traditional rasterization for most of the shading in the scene with a couple of ray-traced buffers, you can get another step closer to that genuine "CG" look for your project. Except in real time! Color me impressed.

Unity devs are also experimenting with ray-tracing:

As of May 2020, only one of the two, Unreal Engine 4, provides such a GI method out of the box in its stable release branch, with Unity lagging behind and still experimenting with ray-traced GI in separate test builds of the Editor.

If you're interested in finding out how real-time ray tracing works and what's possible with it, I have a blog post dedicated specifically to answering those questions: "Reality check. What is NVIDIA RTX Technology? What is DirectX DXR? Here's what they can and cannot do."

A wide variety of post-effects

Like the cherry on the cake, these effects turn mediocre renders into nice-looking ones, and good renders into freaking masterpieces.

Sub-surface scattering

Real-time SSS is mostly available as a screen-space effect. Unreal Engine absolutely tramples the competition when it comes to SSS. Just take a look at this demo:

Sure, it's not just the materials: the rig and the blendshapes add to the realism too. Still, a subsurface scattering approximation is paramount for achieving believable results when portraying a wide variety of real-world materials, including skin, wax, marble, plastic, rubber and jade.

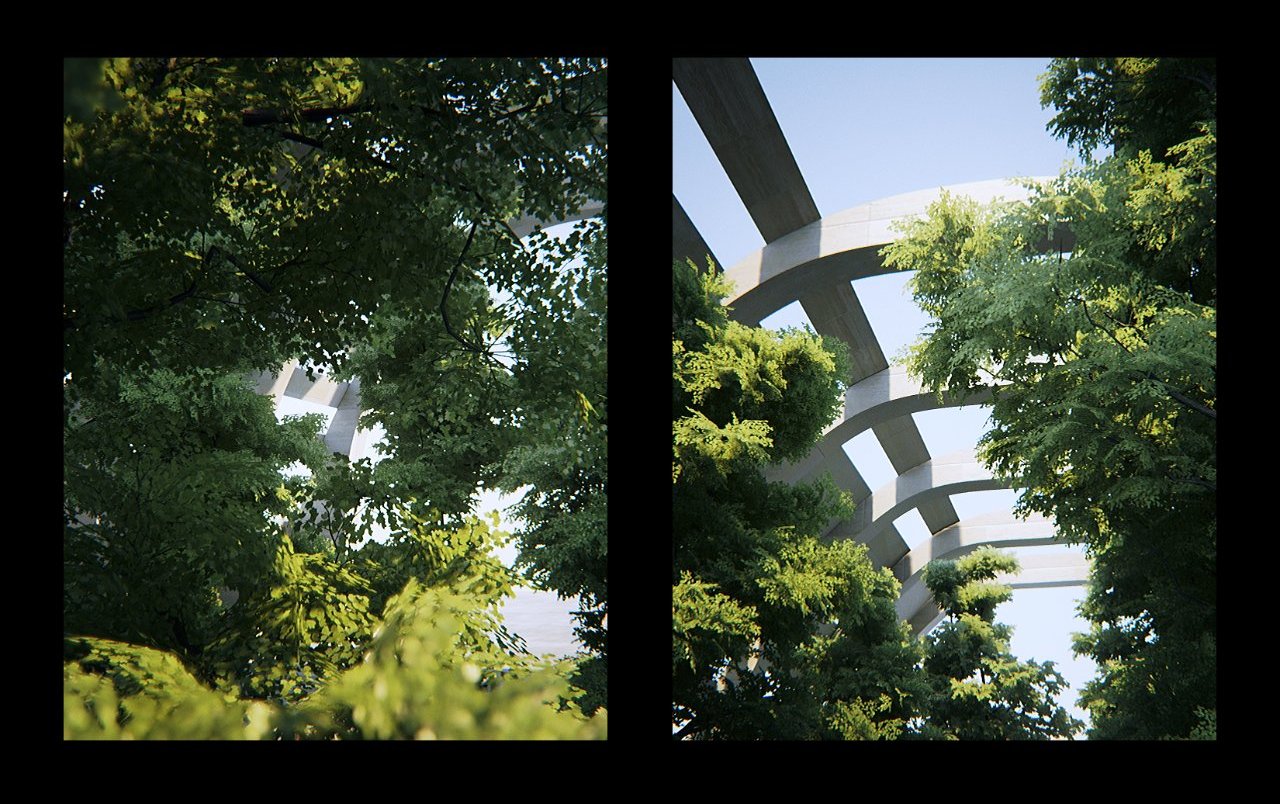

Depth of Field

Real-time depth of field has come a long way. We finally have access to some more or less good-looking implementations, not the awful ones we used to see in the first Crysis or many console games of that time. Some of them produce seriously awesome and very clean DoF, especially when foreground objects are in focus. Still, since it's a post-effect and not real ray-traced DoF, there are certain limitations:

Because DoF is a post-effect applied over an already rendered image, there is no information about what lies behind a foreground object, so applying a lot of blur to foreground objects won't look particularly good. It's the same problem any other video DoF filter has, like Lenscare by Frischluft. The solution is to render out two passes and later comp the blurred foreground over the focused render.
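A rough sketch of that two-pass idea in Python: a thin-lens circle-of-confusion formula tells you how blurry a point at a given distance gets, and the standard premultiplied-alpha "over" operator puts the separately rendered, blurred foreground back on top of the in-focus render. The lens values and function names are hypothetical, and a real comp would of course work on whole images in Nuke or AE rather than single pixels:

```python
def circle_of_confusion(f_mm, f_number, focus_mm, subject_mm):
    """Thin-lens blur-circle diameter on the sensor, in millimetres."""
    aperture = f_mm / f_number  # aperture diameter
    return aperture * abs(subject_mm - focus_mm) / subject_mm * f_mm / (focus_mm - f_mm)

def over(fg_rgb, fg_alpha, bg_rgb):
    """Porter-Duff 'over' for premultiplied colour: out = fg + bg * (1 - alpha_fg)."""
    return tuple(f + b * (1.0 - fg_alpha) for f, b in zip(fg_rgb, bg_rgb))

# 50 mm lens at f/2 focused at 2 m: a foreground object at 1 m gets a big blur circle.
print(round(circle_of_confusion(50.0, 2.0, 2000.0, 1000.0), 3))  # 0.641

# A half-transparent pixel from the blurred foreground pass over the focused background.
print(over((0.2, 0.1, 0.0), 0.5, (1.0, 1.0, 1.0)))
```

The key point is that the blurred foreground pass carries its own soft alpha, so the background behind it is real rendered data instead of something a single-pass post-effect has to guess.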

Anti-Aliasing

Come on! Can you really live without it?

Combine temporal AA with supersampling and you have a beautiful, perfectly clean, flicker-free image which would take hundreds if not thousands of samples to achieve with a path tracer. And all of that goodness in real time!
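The core of most TAA implementations is surprisingly small: each frame the camera is jittered by a sub-pixel amount, and the new sample is blended into an accumulated history buffer, with the history clamped to the current frame's local neighbourhood to limit ghosting. Here's a per-pixel Python sketch; the blend factor is a typical-looking value picked for illustration, not the setting of any particular asset, and real implementations also reproject the history with motion vectors:

```python
def taa_resolve(history, current, neighborhood, alpha=0.1):
    """Blend one pixel's new sample into its history.

    history      -- accumulated value from previous frames (already reprojected)
    current      -- this frame's jittered sample
    neighborhood -- current-frame samples around the pixel (3x3 in practice)
    alpha        -- weight of the new sample; smaller = smoother but more ghosting
    """
    lo, hi = min(neighborhood), max(neighborhood)
    clamped = min(max(history, lo), hi)  # reject history the current frame can't explain
    return clamped + (current - clamped) * alpha

# An edge pixel half covered by an object: with sub-pixel jitter the raw sample
# flips between 0 and 1 every frame, the classic "crawling" edge.
value = 0.0
for frame in range(200):
    sample = float(frame % 2)
    value = taa_resolve(value, sample, neighborhood=[0.0, 1.0])
print(round(value, 2))  # 0.53, close to the true 50% coverage
```

Over many frames the exponential history effectively averages many jittered samples, which is why TAA can approach supersampled quality at the cost of a single sample per pixel per frame.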

There are some amazing examples and assets of TAA available, like CTAA for Unity by Livenda:

Although I don't quite understand the reasoning behind such a high price (a whopping $350), this may very well be the best TAA I've ever seen (yes, including Naughty Dog's excellent TAA used in Uncharted 4). That said, from the looks of it, even the TAA that comes with Unity's original post-effects stack looks pretty good. It took a long time, but we can finally get supersampled-looking images from a real-time engine.

This reminds me of another great Unity asset available on the Asset Store: MadGoat SSAA and Resolution Scaling (basically an actual supersampling filter with a fancy name).

It's especially useful in our case since it's designed less for games than for rendering cinematics and films at the best possible quality.
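Supersampling itself is conceptually simple: render at a multiple of the target resolution, then average each block of pixels down to one. A minimal Python sketch with a plain box filter on a grayscale image stored as nested lists; the function name is made up, and real implementations may well use better downsampling filters:

```python
def downsample(image, factor):
    """Box-filter an image rendered at `factor` times the target resolution."""
    height, width = len(image), len(image[0])
    assert height % factor == 0 and width % factor == 0
    out = []
    for y in range(0, height, factor):
        row = []
        for x in range(0, width, factor):
            block = [image[y + dy][x + dx] for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

# Detail finer than a target pixel (a 1-pixel checkerboard rendered at 2x) resolves to smooth grey.
checker = [[(x + y) % 2 for x in range(4)] for y in range(4)]
print(downsample(checker, 2))  # [[0.5, 0.5], [0.5, 0.5]]
```

The cost grows with the square of the factor (2x per axis means 4x the pixels), which is fine for offline capture of cinematics but rarely affordable in a game.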

Chromatic Aberrations

Whether you're a fan or not, CA really adds to realism by simulating the optical imperfections of real-world lenses. I like it!
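A common post-process way to fake lateral chromatic aberration is to sample the red, green and blue channels at slightly different radial distances from the image centre. A minimal Python sketch that just computes the per-channel UVs; the strength value and the choice of pushing red outward and blue inward are assumptions, not a specific engine's implementation:

```python
def ca_uvs(u, v, strength=0.005, center=(0.5, 0.5)):
    """Per-channel texture coordinates for lateral chromatic aberration.

    Red is pushed slightly away from the centre, blue slightly towards it and
    green stays put, so colour fringes grow towards the edges of the frame.
    """
    cx, cy = center
    du, dv = u - cx, v - cy
    red = (cx + du * (1.0 + strength), cy + dv * (1.0 + strength))
    green = (u, v)
    blue = (cx + du * (1.0 - strength), cy + dv * (1.0 - strength))
    return red, green, blue

print(ca_uvs(1.0, 0.5))  # largest channel separation at the frame edge
```

A shader would then read the red channel of the frame at the red UV, green at the green UV and blue at the blue UV; at the exact centre all three coincide and no fringe appears.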

Motion Blur

I love MB. I consider it the most important phenomenon in cinema, in both real and virtual scenes. IMHO, motion blur adds "substance" and "weight" to the overall motion flow of the scene. There are many implementations of real-time MB available, and they only keep getting better with each iteration.

In the screenshot above you can see how DirectX 11 allows for a much cleaner separation of objects and motion vectors when applying MB over a rendered image. Comparison courtesy of geforce.com.
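Velocity-buffer motion blur, the usual real-time approach, averages several samples of the rendered image along each pixel's screen-space motion vector. Here's a one-dimensional Python sketch (a single scanline, nearest-pixel sampling, velocities in pixels per frame); the sample count is an arbitrary illustrative choice, and real implementations additionally deal with foreground/background separation, which is where the quality differences between implementations show up:

```python
def motion_blur_scanline(colors, velocities, samples=8):
    """Blur each pixel along its own motion vector (1-D, nearest-pixel sampling).

    colors     -- rendered scanline
    velocities -- per-pixel motion in pixels per frame (what a velocity buffer stores)
    """
    n = len(colors)
    out = []
    for x in range(n):
        acc = 0.0
        for s in range(samples):
            # Spread samples evenly across the motion vector, centred on the pixel
            t = (s + 0.5) / samples - 0.5
            sx = min(max(round(x + velocities[x] * t), 0), n - 1)
            acc += colors[sx]
        out.append(acc / samples)
    return out

# A pixel moving 4 px per frame next to a bright edge picks up part of that edge.
print(motion_blur_scanline([0, 0, 0, 1, 1, 1], [0, 0, 4, 0, 0, 0]))
```

Static pixels (zero velocity) come out unchanged, so the effect only costs visual sharpness where something is actually moving.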

Screen-Space Reflections

Real-time SSR is a controversial topic. In theory it's a great idea to utilize already rendered screen-space data to calculate reflections, but there are many, many cases where SSR technique simply falls apart. You can read more about these issues in this article.

All in all, screen-space reflections look great when they work, but I think more often than not spherical reflection probes may fare better, especially if you make them update every frame. It will be expensive, but it will provide you with a reliable source of data for reflection mapping. Once again, we're focusing on looks here and not performance.

Color grading tools and HDR tonemapping

Color grading can make or break your creation. It can help transform ordinary renders into pictures with "substance" and "mood".

Modern game engines provide artists with lots of tools to grade the hell out of any scene:

Looks similar to the grading toolsets in DaVinci Resolve or Colorista, doesn't it? Grading can be applied on a per-shot basis, so in theory you should be able to cut, or "edit", your shots right in your game engine's timeline editor and apply post and color correction at the same time.
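Under the hood, a grade like this boils down to a few per-channel formulas applied to the linear HDR image before it is tonemapped for display. A minimal Python sketch: the slope/offset/power step follows the ASC CDL definition, the tonemapper is Krzysztof Narkowicz's widely used rational fit of the ACES filmic curve, and the last step is standard sRGB encoding. The ordering and parameter values are illustrative assumptions; engines differ in exactly where grading happens:

```python
def asc_cdl(c, slope=1.0, offset=0.0, power=1.0):
    """ASC CDL per-channel grade: out = (in * slope + offset) ** power.

    Negative values are clamped to zero so a fractional power stays real.
    """
    return max(c * slope + offset, 0.0) ** power

def aces_fit(x):
    """Narkowicz's rational fit of the ACES filmic curve, clamped to [0, 1]."""
    a, b, c, d, e = 2.51, 0.03, 2.43, 0.59, 0.14
    return min(max((x * (a * x + b)) / (x * (c * x + d) + e), 0.0), 1.0)

def linear_to_srgb(c):
    """Standard sRGB encoding of a linear value in [0, 1]."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055

def grade_pixel(rgb, slope=(1.0, 1.0, 1.0), offset=(0.0, 0.0, 0.0),
                power=(1.0, 1.0, 1.0), exposure=1.0):
    """Exposure -> CDL grade -> tonemap -> display encode, per channel."""
    return tuple(
        linear_to_srgb(aces_fit(asc_cdl(ch * exposure, s, o, p)))
        for ch, s, o, p in zip(rgb, slope, offset, power)
    )

# Warm up a mid-grey pixel by boosting red slope and reducing blue slope.
print(grade_pixel((0.18, 0.18, 0.18), slope=(1.1, 1.0, 0.9)))
```

Doing the grade on linear HDR values and tonemapping last means highlights roll off smoothly instead of clipping, which is much of what makes the in-engine grading look "filmic".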

Volumetric effects

Yes, this too is perfectly possible and can add more substance to your shots. It may be a little finicky to set up, but current implementations are almost completely flicker- and shimmer-free and look damn good.
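Most real-time volumetric fog is built around ray marching: step along the view ray through the medium, attenuating with Beer-Lambert transmittance and accumulating the light scattered towards the camera. A minimal Python sketch for a homogeneous medium with constant in-scattering; the coefficients and step count are hypothetical, and real implementations march through 3D froxel grids, use phase functions and sample shadow maps at every step:

```python
import math

def march_fog(distance, sigma_t, in_scatter, steps=64):
    """Ray-march a homogeneous medium along a view ray.

    distance   -- length of the view ray inside the fog
    sigma_t    -- extinction coefficient per unit length (must be > 0)
    in_scatter -- radiance scattered towards the camera per unit length
    Returns (transmittance, accumulated in-scattered light).
    """
    dt = distance / steps
    step_transmittance = math.exp(-sigma_t * dt)  # Beer-Lambert for one step
    transmittance, light = 1.0, 0.0
    for _ in range(steps):
        # Light scattered inside this step, integrated analytically over the step
        light += transmittance * in_scatter * (1.0 - step_transmittance) / sigma_t
        transmittance *= step_transmittance
    return transmittance, light

t, fog = march_fog(20.0, sigma_t=0.1, in_scatter=0.05)
print(t, fog)  # final pixel = scene_color * t + fog
```

For this simple constant medium the loop reproduces the closed-form answer exactly; the marching structure only pays off once density, lighting or shadowing vary along the ray, which is precisely the interesting case for shafts of light and such.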

A couple of others

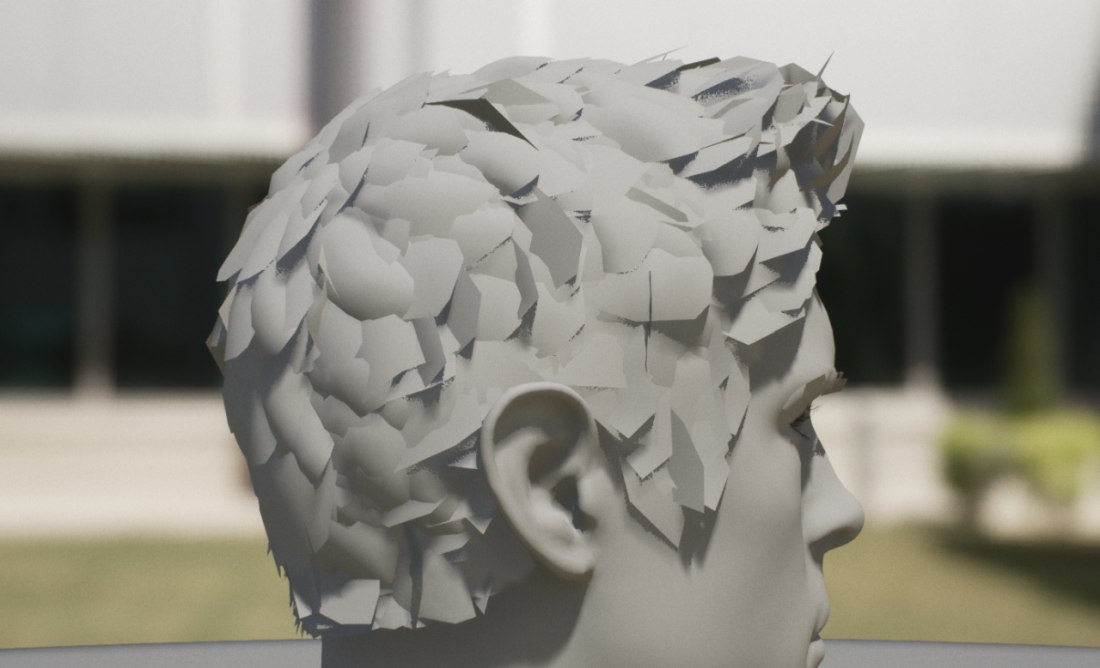

Hair and fur

I can't say real-time hair and fur rendering techniques have really evolved in publicly available game engines. Most of the time we still see good ol' static polygonal hair or, if you're lucky, a couple of skinned ponytails and a polygonal hair strip or two animated with procedural turbulence.

This is really puzzling, since NVIDIA demonstrated great real-time dynamic hair back in 2004 in its interactive demo "Nalu". And that was at a time when the GeForce 6800 was considered one of the fastest GPUs. You'd think it would make it into game engines sooner rather than later, but no!

The demo ran at pretty high frame rates on the GF6800, and I'm truly baffled we still haven't seen anything like it even in the most recent games created in Unity/UE/CryENGINE, since the NVIDIA GTX 1070, for example, is over 10 000 percent faster than the GeForce 6800! Modern GPUs are more than capable of such hair rendering techniques, but we're still seeing the good old non-planar polygon hair without much simulation (regardless of whether the hair is short or long). Granted, shading techniques have gotten better, but it doesn't change the fact that we're still pretty much shading billboards!

There have been some real-time hair developments like AMD TressFX, PureHair and NVIDIA HairWorks which look great, but as far as I'm aware they aren't easily available in any freely accessible game engine.

I have a lot of questions regarding real-time hair and will spend more time studying the techniques and available tools in the near future.

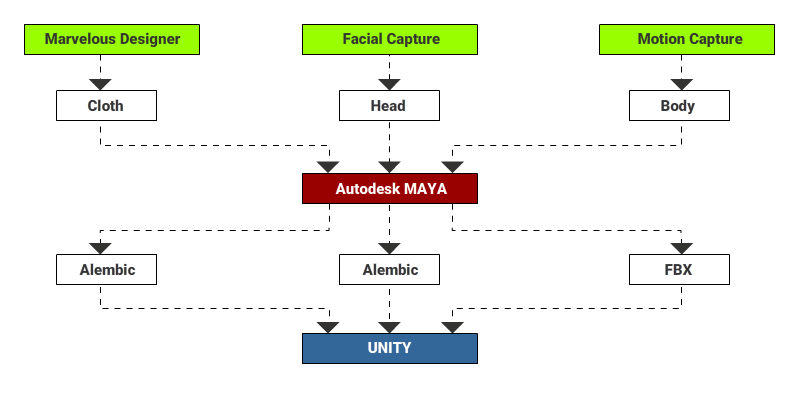

Industry-standard formats and overall pipeline integration

Several years ago I wouldn't have dreamed of making Unity or UE part of an animated film pipeline, rather than just the usual interactive charade or cold storage for finished game assets. Yet here we are.

Current versions of Unity and UE support well-established interchange formats for both import and export.

Example of a pipeline utilizing Alembic from the Unity blog

Both can import and play back Alembic cache files. In Unity's case, Alembic support was first developed for the short animated film The Gift by MARZA:

The Unity team quickly realized the potential of the asset, and Alembic support was later officially integrated into the Editor itself, with the source code made available. The same goes for Alembic in Unreal Engine.

Then there is export. I remember doing all sorts of cool things in Unity and being bummed out that I couldn't easily export those into Softimage for rendering. Now Unity supports exporting to Alembic and FBX! And that includes animation and most geometric object types as well as cameras.

Sure, exporting 3D files and caches is fine and all, but the most important export is the one that pops those pretty pictures onto the storage drive! I mean, if you really squeeze every bit of goodness out of your real-time game engine, you probably won't get very "real-time" frame rates, so ordinary screen-capture software won't cut it anymore. Hence you'll still need a way to capture those frames, right?

Well, you're covered! Unreal Engine's Sequencer supports rendering cinematics with separate passes and into several image formats, including OpenEXR! Unity doesn't provide the same toolset, but (surprise, surprise!) you can almost always find an asset on the Asset Store to do the job. In this case it would be Offline Render. Not only can it export passes for each frame, it also supports off-screen rendering, which means the resolution of your renders won't be limited by your screen resolution.
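Whatever tool does the capturing, the key idea behind offline rendering from a real-time engine is decoupling simulated time from wall-clock time: the engine advances by exactly 1/24 s per frame no matter how long that frame took to render, and every frame gets written to disk. A minimal Python sketch of such a loop; `update`, `render` and `save` are hypothetical callbacks standing in for engine calls (Unity exposes a similar idea through `Time.captureFramerate`), and the filename pattern is made up:

```python
from fractions import Fraction

def render_offline(update, render, save, fps=24, frames=48):
    """Step the scene with a fixed timestep and save every frame, however slow."""
    dt = Fraction(1, fps)  # exact frame duration, so time never drifts
    t = Fraction(0)
    for frame in range(frames):
        update(t)  # advance animation/simulation to exactly this time
        image = render()  # may take seconds; simulated time doesn't care
        save(f"shot010_{frame:05d}.exr", image)
        t += dt
    return t  # total simulated duration

# Dummy callbacks: 48 frames at 24 fps always cover exactly 2 seconds of animation.
print(render_offline(lambda t: None, lambda: "image", lambda name, img: None))  # 2
```

Using an exact rational timestep is a small design choice that avoids floating-point drift over thousands of frames, so frame 12,000 lands on precisely the same time as it would in the DCC the animation came from.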

It's no wonder, then, that giants such as Next Limit make Unity their first choice as a platform for the aforementioned production physics framework. With all the possibilities Unity offers for setting up and directing simulations, object relations/parenting and such during playback, plus a built-in ability to easily export the results, I think we'll see more and more cool non-game stuff getting Unity interop support. Like Octane, for example. Yes! The Octane renderer now has an official Unity integration, and the full-featured version for one GPU is actually available for free.

Still NOT a silver bullet

All in all, graphics hardware capable of what we see in Unity and Unreal Engine tech demos, together with software that can finally harness that power better than ever before, is a duo to be reckoned with. Add real-time simulation and support for playing back point-cached meshes for rendering, and you have an interactive creative environment like never before.

Don't get me wrong, though. Real-time engines don't magically cut the film pipeline in half. Content creation is still there, as are direction, camera work, lighting, etc. The biggest difference is that doing this in, say, Unity instead of Softimage+Redshift makes it oh so much more interactive, simply because you can pretty much see the final rendered image at any given time without going through minutes or even hours of render time.

In the end it's just more FUN! And I will sure take as much incentive as I can get when it comes to working on such a complicated personal project as an animated film.