New Unity 2019 2D Sprite Rigging and Lighting Tools

With the latest 2019.1 update, the Unity team has seriously upgraded the editor's 2D toolset:

Seems like a lot of those tools were inspired by the Rayman Legends engine. Something I was drooling over 5 years ago, hoping one day Unity would implement some of those. And they finally did!

It really makes me all warm and fuzzy inside to remember how most of those automated 2D tools and thingies were not available when I was working on Run and Rock-it Kristie in the freeware version of Unity 4 (which back then lacked even the Sprite packing functionality!). They had to be either developed from scratch or achieved with some cool Asset Store stuff you would need to purchase and integrate into your product.

Oh, the tools... The tools...

IK and rigging with bone weighting? Check:

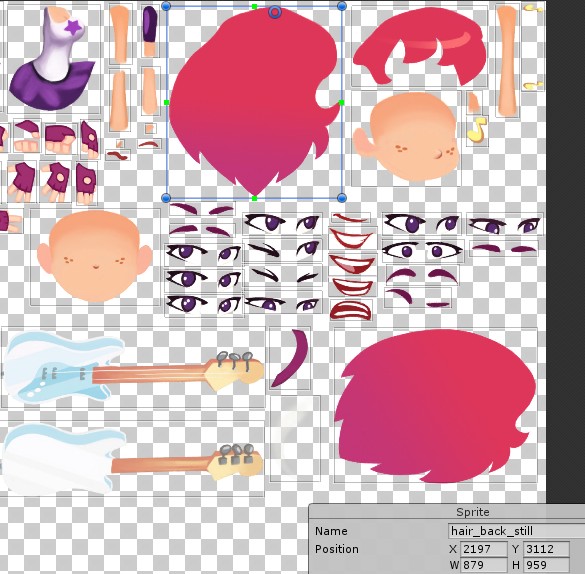

Manual sprite atlasing and set-up? Check (atlasing is vital for mesh batching and draw call reduction):

Sprite assembly and animation? Check:

Dynamic level-building tools? Check (with a modded version of the Ferr2D asset):

Dynamic lighting? Check! With up to 4 live vertex lights and camera-distance-based light culling to make sure the game would run at a stable 60 fps on iPhone 4 (heavily reliant on the amazing but now deprecated Core Framework asset by echoLogin):

It was... Fun!

You know what I also remember? I remember almost every day working on the game I felt excited and driven. It was FUN. Even when it was challenging to solve some technical issues (there were a lot of those, especially for a beginner) or work around Unity limitations or bugs, it felt truly rewarding and would give such a powerful motivation boost that I would continue working until the end.

Until the release.

But the "serious" CG movie stuff I'm doing now?.. Honestly? Meh. It's so slow and clunky compared to my previous game development experience. Everything needs to be either cached or rendered... I'm also having some grave issues with hair simulation which I haven't been able to overcome for the last 6+ months. Many operations in the "classic" world of 3D editors are still either single-threaded or unstable, or they require some very specific knowledge or a particular and elaborate set-up... It's almost no fun! No fun means much, much less motivation to continue.

It's a problem.

Therefore this July I will instead be checking out the latest Unity Engine to see whether most of what I'm doing right now could be ported into Unity. Starting with simulation and scene assembly and, hopefully, ending up animating, rendering and applying post effects right within the Unity Editor, where everything is real-time and fun! I miss the real-time aspect! Oh boy, do I miss the ability to tweak materials and see the more or less finalized render of the scene even during assembly. The ability to import assets and build "smart" prefabs (like Softimage Models, but with interactivity and intrinsic scene-aware scripting via MonoBehaviour), etc...

I miss you, Unity!

Unity and Unreal Engine: Real-Time Rendering VS Traditional 3DCG Rendering Approach

(Revised and updated as of June 2020)

Preamble

Before reading any further, please find the time to watch these. I promise, you won't regret it:

Now let's analyze what we just saw and make some important decisions. Let's begin with how all of this could be achieved with a "traditional" 3D CG approach and why it might not be the best path to follow in the year 2020 and up.

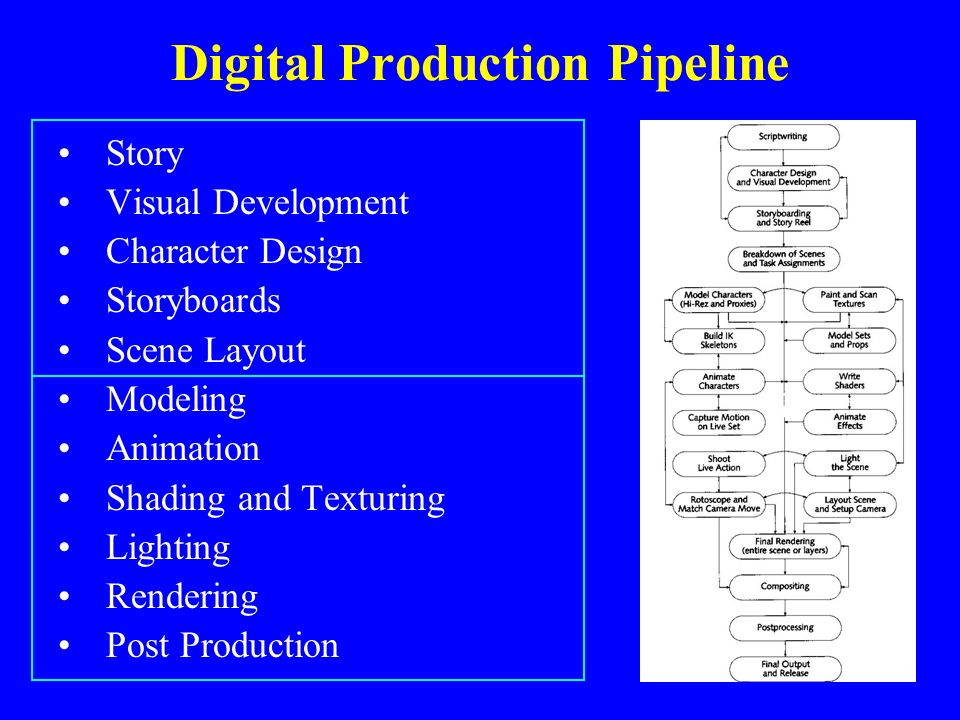

Linear pipeline and the One Man Crew problem

I touched upon this topic in one of my previous posts.

The "traditional" 3D CG-animated movie production pipeline is quite complicated. Not taking pre-production and animation/modeling/shading stages into consideration, it's a well-known fact that an A-grade animated film treats every camera angle as a "shot" and these shots differ a lot in requirements. Most of the time character and environment maps and even rigs would need to be tailored specifically for each one of those.

Shot-to-shot character shading differences in the same scene in The Adventures of Tintin (2011)

For example, if a shot features a close-up of a character's face, there is no need to subdivide the character's body each frame and feed it to the renderer. But it also means the facial rig needs more controls, and the face probably requires an additional triangle or two, a couple of extra blendshapes, and displacement/normal maps for wrinkles and such.

But the worst thing is that the traditional pipeline is inherently linear.

Thus you will only see production-quality images very late in the production process, especially if you are relying on path-tracing render engines and lack the computing power to render out hundreds of frames at a time. I mean, we are talking about an animated feature that runs at 24 frames per second. For a short film of a little over 8 minutes this translates into about 12 thousand still frames. And those aren't your straight-out-of-the-renderer beauty pictures. Each final frame is a composite of several separate render passes as well as special effects and other elements sprinkled on top.
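To put rough numbers on this, here is a minimal back-of-the-envelope sketch. The helper names (`frame_count`, `render_jobs`) and the figure of 5 passes per frame are made up purely for illustration; only the 24 fps rate and the roughly 8-minute running time come from the text above.

```python
def frame_count(minutes: int, seconds: int = 0, fps: int = 24) -> int:
    """Number of still frames in a film of the given running time."""
    return (minutes * 60 + seconds) * fps


def render_jobs(frames: int, passes_per_frame: int) -> int:
    """Total separate renders if every frame is split into passes."""
    return frames * passes_per_frame


if __name__ == "__main__":
    exactly_eight = frame_count(8)          # 8 min 00 s at 24 fps
    eight_twenty = frame_count(8, 20)       # 8 min 20 s at 24 fps
    print(exactly_eight)                    # 11520
    print(eight_twenty)                     # 12000

    # Hypothetical: 5 render passes per final frame (an assumption,
    # not a figure from any real production).
    print(render_jobs(exactly_eight, 5))    # 57600
```

So a film of exactly 8 minutes is 11,520 frames, and the 12 thousand mark is reached at about 8 minutes 20 seconds. Multiply by however many passes each frame needs and the number of individual renders grows accordingly.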

Now imagine that at a later stage of the production you or the director decides to make adjustments. Well, shit. All of those comps you rendered out and polished in AE or Nuke? Worthless. Update your scenes, re-bake your simulations and render, render, render those passes all over again. Then comp. Then post.

Sounds fun, no?

You can imagine how much time it would take one illiterate amateur to plan and carry out all of the shots in such a manner. It would be just silly to attempt such a feat.

Therefore, the bar of what I consider acceptable given the resources available at my disposal keeps getting...

Lower.

There! I finally said it! It's called a reality check, okay? It's a good thing. Admitting you have a problem is the first step towards a solution, right?

Right!?..

Oops, wrong picture

All is not lost and it's certainly not the time to give up.

Am I still going to make use of Blend Shapes to improve facial animation? Absolutely, since animation is the most important aspect of any animated film.

But am I going to do realistic fluid simulation for large bodies of water (ocean and ocean shore in my case)? No. Not any more. I'll settle for procedural Tessendorf Waves. Like in this R&D I did some time ago:

Will I go over the top with cloth simulation for characters in all scenes? Nope. It's surprising how often you can get away with skinned or bone-rigged clothes instead of actually simulating them, or even use real-time solvers on mid-poly meshes without caching the results... But now I'm getting a bit ahead of myself...

Luckily, there is a way to introduce the "fun" factor back into the process!

And contemporary off-the-shelf game engines may provide a solution.